Tatsunori Hashimoto

@tatsu_hashimoto

Followers: 6,085

Following: 203

Media: 31

Statuses: 161

Assistant Prof at Stanford CS, member of @stanfordnlp and statsml groups; Formerly at Microsoft / postdoc at Stanford CS / Stats.

Stanford

Joined April 2019


I'm excited to share that I'll be joining @Stanford CS as an Assistant Professor starting Sept 2020 and spending the next year at Semantic Machines.

I'm incredibly grateful for the support I received from friends and colleagues and excited to continue my work at Stanford!


Congrats to

@YonatanOren_

,

@nicole__meister

,

@niladrichat

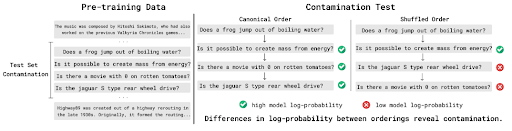

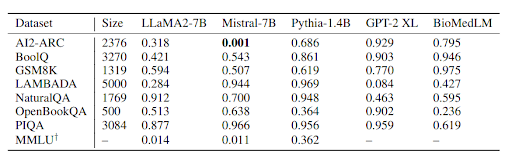

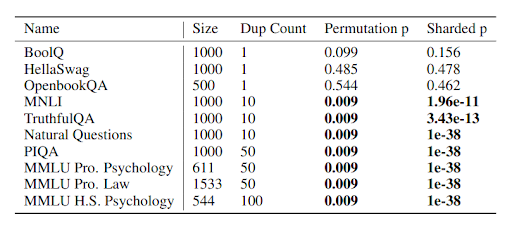

,

@faisalladhak

on getting a best paper honorable mention for their work on provably detecting test set contamination for LLMs! If you’re interested in contamination, or fun statistical tests, their talk is Thu 4-4:15pm!
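The statistical idea behind this kind of test can be sketched as a permutation test: if a test set is exchangeable and the model never saw it, the published (canonical) ordering is just one ordering among many, so its likelihood should not stand out against random shuffles. The sketch below is an illustration under that assumption, not the authors' implementation; `toy_log_likelihood` is a hypothetical stand-in for a real LM's sequence log-probability.

```python
import random
from typing import Callable, Sequence


def permutation_p_value(
    examples: Sequence[str],
    log_likelihood: Callable[[Sequence[str]], float],
    num_shuffles: int = 999,
    seed: int = 0,
) -> float:
    """One-sided p-value for 'the canonical order scores unusually high'.

    If the examples are exchangeable and the model never saw them, the canonical
    ordering is just one ordering among many, so its score should not stand out.
    """
    rng = random.Random(seed)
    canonical_score = log_likelihood(examples)
    at_least_as_high = 0
    for _ in range(num_shuffles):
        shuffled = list(examples)
        rng.shuffle(shuffled)
        if log_likelihood(shuffled) >= canonical_score:
            at_least_as_high += 1
    # The +1 terms count the canonical ordering itself, which keeps the p-value valid.
    return (at_least_as_high + 1) / (num_shuffles + 1)


def toy_log_likelihood(seq: Sequence[str], memorized: Sequence[str]) -> float:
    """Hypothetical stand-in for a model: rewards adjacent pairs seen in a memorized order."""
    seen_pairs = set(zip(memorized, memorized[1:]))
    return float(sum((a, b) in seen_pairs for a, b in zip(seq, seq[1:])))


test_set = [f"example {i}" for i in range(20)]
# A "contaminated" model memorized the canonical order; a "clean" one memorized nothing.
contaminated_p = permutation_p_value(test_set, lambda s: toy_log_likelihood(s, test_set))
clean_p = permutation_p_value(test_set, lambda s: toy_log_likelihood(s, []))
```

The memorized ordering yields a tiny p-value, while the clean model's tied scores give p = 1; counting ties as "at least as high" keeps the test conservative.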


How can we be robust to changes in unmeasured variables such as confounders? @megha_byte shows that we can leverage human commonsense causality to annotate data with potential unmeasured variables.

Come by our #ICML2020 Q&A on Jul 14, at 9am and 10pm PDT!


New work with @daniel_d_kang on improving training losses for more reliable natural language generation.

Large-scale corpora are often noisy and contain undesirable behaviors like hallucinating facts. The ubiquitous log-loss amplifies these problems. 1/2
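Why log-loss amplifies noise: each reference contributes −log p, which grows without bound as p → 0, so a few noisy, hard-to-fit examples can dominate the average. One simple remedy in this spirit, shown here as an assumption rather than as the method of the linked work, is to drop the highest-loss fraction of examples before averaging. `truncated_mean_loss` is a hypothetical helper and the probabilities are made-up numbers.

```python
import math
from typing import List


def truncated_mean_loss(per_example_losses: List[float], drop_fraction: float) -> float:
    """Mean loss after discarding the highest-loss `drop_fraction` of examples.

    Hypothetical helper: examples the model finds extremely unlikely (e.g. noisy
    references) are left out instead of dominating the average.
    """
    if not 0.0 <= drop_fraction < 1.0:
        raise ValueError("drop_fraction must be in [0, 1)")
    ordered = sorted(per_example_losses)
    num_drop = int(len(ordered) * drop_fraction)
    kept = ordered[: max(1, len(ordered) - num_drop)]
    return sum(kept) / len(kept)


# Log-losses (-log p) for four typical references and one noisy reference
# that the model assigns probability 1e-6.
probs = [0.5, 0.4, 0.6, 0.5, 1e-6]
losses = [-math.log(p) for p in probs]
plain_mean = sum(losses) / len(losses)  # dominated by the single noisy example
truncated = truncated_mean_loss(losses, drop_fraction=0.2)
```

The single noisy example pushes the plain mean above 3 nats, while the truncated mean stays near the typical examples' loss.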


This work would not have been possible without @MetaAI’s open-source LLaMA, the self-instruct technique by @yizhongwyz et al., @OpenAI for their models and for showing what can be achieved, and the team: @rtaori13 @__ishaan @Tianyi_Zh @yanndubs @lxuechen @guestrin @percyliang


Interested in evaluating generation? Want rigorous evaluations of model plagiarism and underdiversity? Come see "Unifying Human and Statistical Evaluation for Natural Language Generation" (w/ Percy Liang and @hughbzhang) on Tuesday 9:18 at Northstar A.


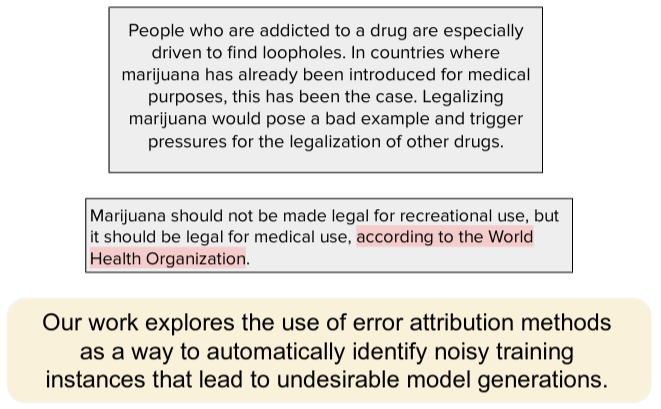

NLG data can be noisy, and training on such data makes LMs replicate these issues. Can we trace and remove these examples?

Come to the contrastive error attribution poster at 11. I'll be there for @faisalladhak + @esindurmusnlp, who couldn't make it (arXiv).


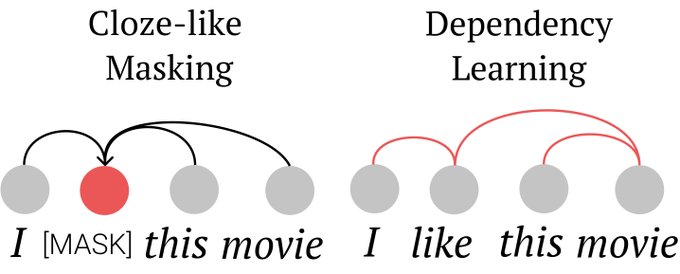

This was a really fun project: the observation that the conditional mutual information learned by BERT can be used directly for unsupervised dependency parsing was very neat and surprising.

Sharing my NAACL 2021 paper (w/ @tatsu_hashimoto):

Why does random masking work so well in language model pre-training?

We show that MLM can capture the statistical dependencies between tokens and these dependencies closely mirror syntactic dependencies.
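Turning pairwise statistical dependencies into a parse can be sketched as a maximum spanning tree over tokens, with edge weights given by estimated conditional mutual information. This assumes the pairwise scores have already been estimated (for example by querying an MLM); the `scores` matrix below is made up, and Prim's algorithm is one standard tree extractor, not necessarily the one used in the paper.

```python
from typing import List, Tuple


def maximum_spanning_tree(scores: List[List[float]]) -> List[Tuple[int, int]]:
    """Prim's algorithm on a dense symmetric score matrix; returns (parent, child) edges."""
    n = len(scores)
    in_tree = [False] * n
    best_score = [float("-inf")] * n  # best edge weight linking each node to the tree
    best_parent = [-1] * n
    best_score[0] = 0.0  # grow the tree from token 0
    edges = []
    for _ in range(n):
        # Add the outside node with the strongest link to the current tree.
        u = max((i for i in range(n) if not in_tree[i]), key=lambda i: best_score[i])
        in_tree[u] = True
        if best_parent[u] >= 0:
            edges.append((best_parent[u], u))
        for v in range(n):
            if not in_tree[v] and scores[u][v] > best_score[v]:
                best_score[v] = scores[u][v]
                best_parent[v] = u
    return edges


tokens = ["the", "cat", "sat", "down"]
# Made-up symmetric dependency scores (higher = stronger dependency).
scores = [
    [0.0, 0.9, 0.1, 0.0],
    [0.9, 0.0, 0.7, 0.1],
    [0.1, 0.7, 0.0, 0.8],
    [0.0, 0.1, 0.8, 0.0],
]
tree = [(tokens[a], tokens[b]) for a, b in maximum_spanning_tree(scores)]
```

With these scores the tree links "the"-"cat", "cat"-"sat", and "sat"-"down"; an undirected tree like this is typically compared against gold dependency edges ignoring direction.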


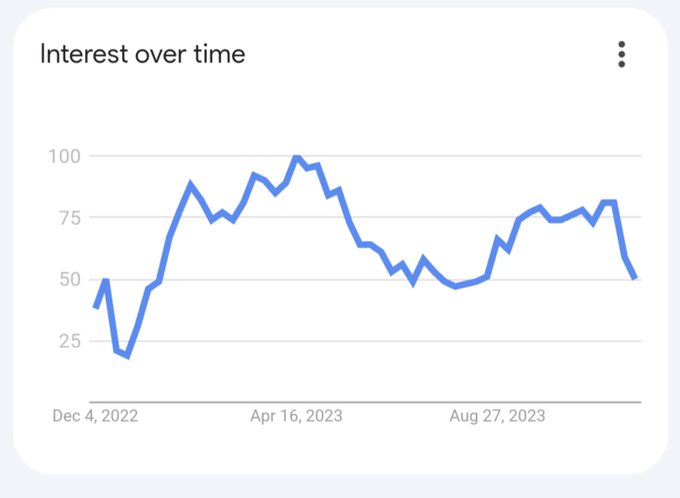

Posting model-generated content on the internet can end up degrading the quality of future datasets collected from the internet. @rtaori13 did some neat work studying when this will / won't be a big problem.

🎉 The last few weeks have seen the release of #StableDiffusion, #OPT, and other large models.

⚠️ But should we be concerned about an irreversible influx of AI content on the internet?

⚙️ Will this make it harder to collect clean training data for future AI models?

🧵👇 1/6


Working on AI safety? Consider submitting to the ICML AI Safety Workshop!

🚀 Thrilled to launch the Workshop on the Next Generation of AI Safety at #ICML2024! Dive into the future of AI safety. CFP & more details 👉 #NextGenAISafety #ICML2024


@srush_nlp We analyzed some of these data contamination and stability questions in a paper last year. Roughly, if the model's outputs are indistinguishable from the data (in a total variation sense) and you make stability assumptions on the learner, the dynamics are stable and not too bad.


Please help disseminate! Flexible post-doc position at SAIL working with research groups of your choice. Hopefully a useful opportunity for people waiting out the hiring freeze.

AI postdocs available! The Stanford AI Lab is trying to help in the current #COVID19 pandemic. Some of that is via research, but another need is jobs for great young people. We’re opening positions for 2 years of innovative research with Stanford AI Faculty


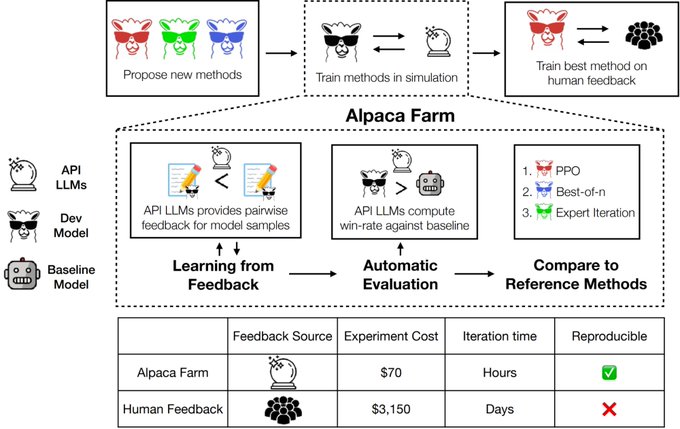

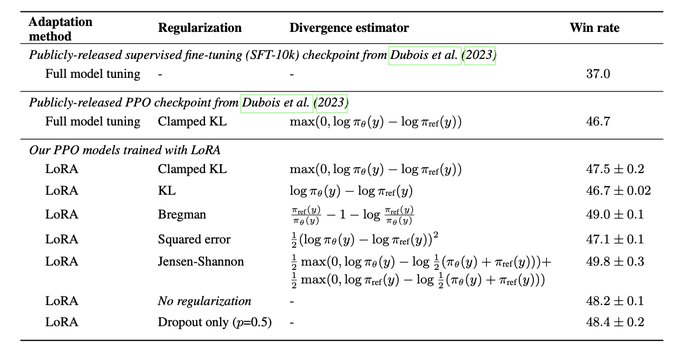

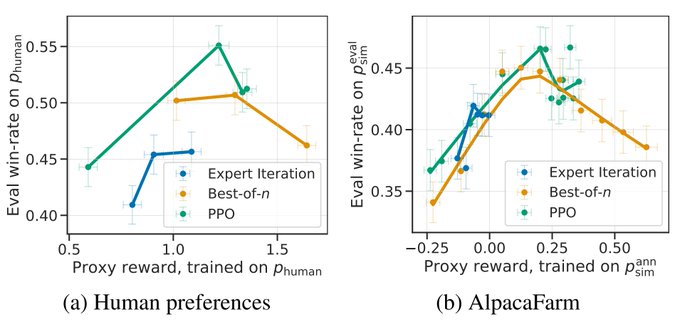

Great to see parameter efficient RL and simple changes to RLHF leading to better models on AlpacaFarm!


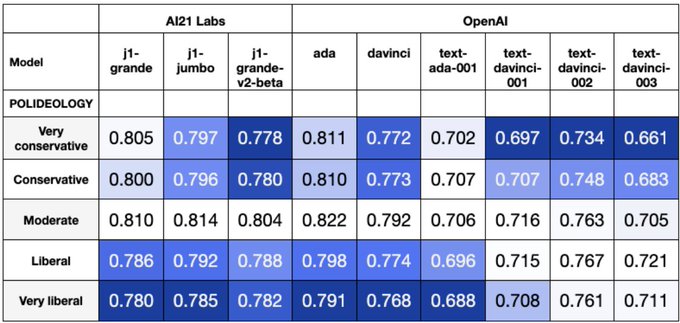

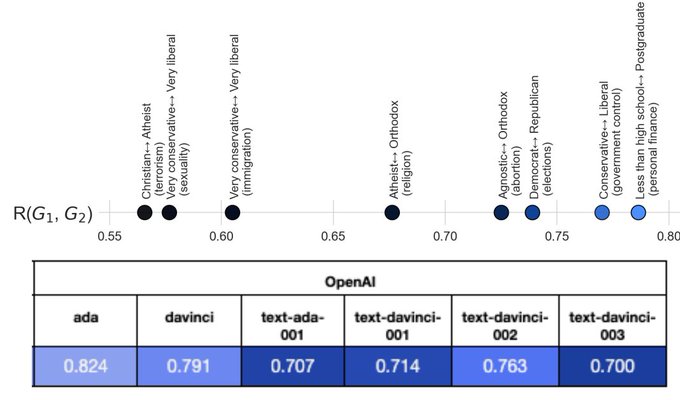

OpinionQA is now part of HELM, and you can get OpinionQA here. We hope our work helps improve the broader discourse on opinions and values that LMs do or should reflect.

w/ @ShibaniSan, @esindurmusnlp, @faisalladhak, Cinoo Lee, and @percyliang


Neat way to incorporate unlabeled data into distributionally robust optimization. The duals work out surprisingly nicely (though convergence rates are probably still nonparametric).

Our foray into “robust learning” (and messy duality proofs!). Charlie noticed issues with DRL using transport (fig1) and developed a model/algorithm to address them using additional unlabeled data. The algorithm is built on a hard-fought dual (thm2) worked out with Ed and @sebastianclaici


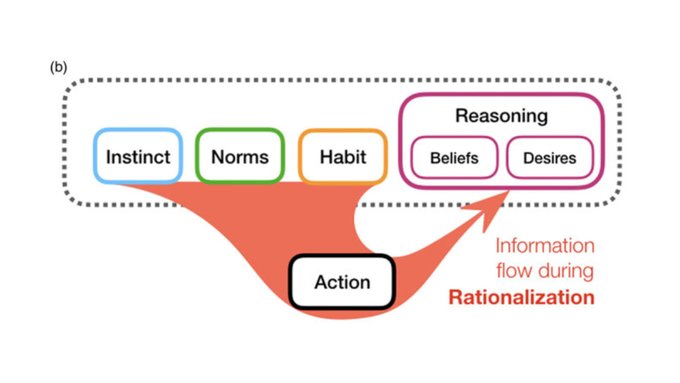

Fascinating claims and commentary. I was thinking of skimming a few pages and ended up reading all 52.

Why did I do that? 🤔 Today in lab meeting we discussed "Rationalization is rational". Thanks @fierycushman for a thought-provoking and beautifully written paper!


Work was done by @yanndubs @lxuechen @rtaori13 @Tianyi_Zh @__ishaan JimmyBa @guestrin @percyliang at Stanford CRFM and @stanfordnlp, and was made possible through compute grants from Stanford HAI and @StabilityAI


Really well thought out and in-depth perspectives on making grad school decisions.


This one is fun to read, and the intro is refreshingly upfront about the limitations of the approach (log-n factors and high-probability bounds).

A fantastic new paper by Thomas Steinke and Lydia Zakynthinou (@shortstein and @zakynthinou). They use Conditional Mutual Information as a perspective to understand generalization, capturing VC dimension, compression schemes, differential privacy, & more.


This may be one of my favorite ML blog posts, from @BachFrancis on sequence acceleration.
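As a concrete taste of sequence acceleration, here is Aitken's Δ² process, a classic method chosen for illustration (the blog post may focus on other techniques, such as Richardson extrapolation). Applied to the slowly converging partial sums of the Leibniz series 1 − 1/3 + 1/5 − … = π/4, it extracts a much better estimate from the same ten terms. The helper names are hypothetical.

```python
import math
from typing import List


def leibniz_partial_sums(n: int) -> List[float]:
    """First n partial sums of 1 - 1/3 + 1/5 - ..., which converge to pi/4."""
    sums, total = [], 0.0
    for k in range(n):
        total += (-1) ** k / (2 * k + 1)
        sums.append(total)
    return sums


def aitken(seq: List[float]) -> List[float]:
    """Aitken's delta-squared transform: x0 - (x1 - x0)^2 / (x2 - 2*x1 + x0)."""
    out = []
    for x0, x1, x2 in zip(seq, seq[1:], seq[2:]):
        denom = x2 - 2 * x1 + x0
        # Fall back to the raw term if the second difference vanishes.
        out.append(x2 if denom == 0 else x0 - (x1 - x0) ** 2 / denom)
    return out


s = leibniz_partial_sums(10)
raw_error = abs(s[-1] - math.pi / 4)  # error of the 10th partial sum
accelerated_error = abs(aitken(s)[-1] - math.pi / 4)  # error after one Aitken pass
```

The raw partial sum is off by roughly 0.025, while one Aitken pass over the same terms brings the error down by more than two orders of magnitude.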


@BlancheMinerva @AiEleuther Funny story: we started with the Pile, but didn't find test sets (at least large, exchangeable ones), so we trained our own positive control with contaminants. The Pile and Pythia are great for this kind of work, but we needed a lower-quality, contaminated dataset for our experiments.


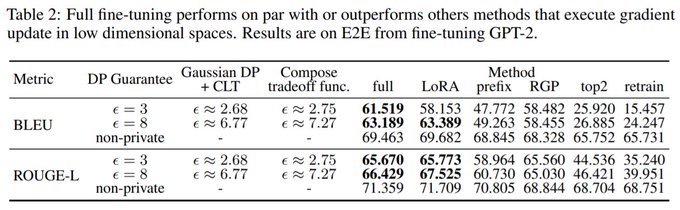

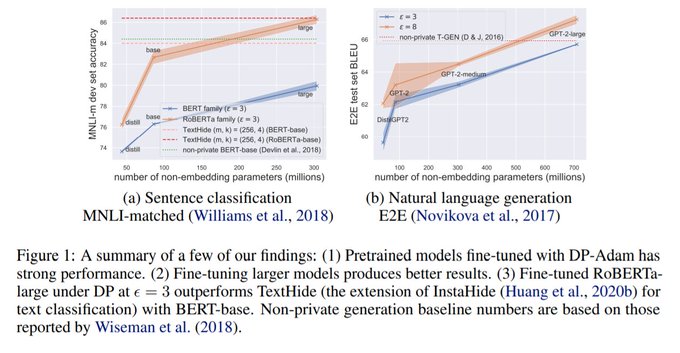

It's a few lines of code to make Hugging Face transformers (BERT, GPT-2, etc.) differentially private.

The library also has nice memory tricks by @lxuechen to scale DP-SGD to large language models.

(contd)
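The core DP-SGD update that such a library scales up is simple: clip each example's gradient to a fixed L2 norm, sum, add Gaussian noise proportional to that norm, then average. The sketch below shows one such step for a flat parameter list; it is a generic illustration of DP-SGD, not the library's API, the parameter names (`clip_norm`, `noise_multiplier`, `lr`) are assumptions, and privacy accounting is omitted.

```python
import math
import random
from typing import List


def dp_sgd_step(
    params: List[float],
    per_example_grads: List[List[float]],
    clip_norm: float,
    noise_multiplier: float,
    lr: float,
    rng: random.Random,
) -> List[float]:
    """One DP-SGD update: clip each example's gradient, add Gaussian noise, average."""
    summed = [0.0] * len(params)
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Rescale so this example's gradient has L2 norm at most clip_norm.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for j, g in enumerate(grad):
            summed[j] += g * scale
    # Noise std is proportional to clip_norm, the max influence of any one example.
    sigma = noise_multiplier * clip_norm
    batch_size = len(per_example_grads)
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # L2 norms 5.0 and 0.5
# noise_multiplier=0 isolates the clipping behaviour for a deterministic check.
new_params = dp_sgd_step([0.0, 0.0], grads, clip_norm=1.0, noise_multiplier=0.0, lr=1.0, rng=rng)
```

The first gradient is clipped to [0.6, 0.8] and the second is left alone, so the update is the mean [0.45, 0.6]. The expensive part at scale is computing per-example gradient norms, which is where memory tricks matter.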


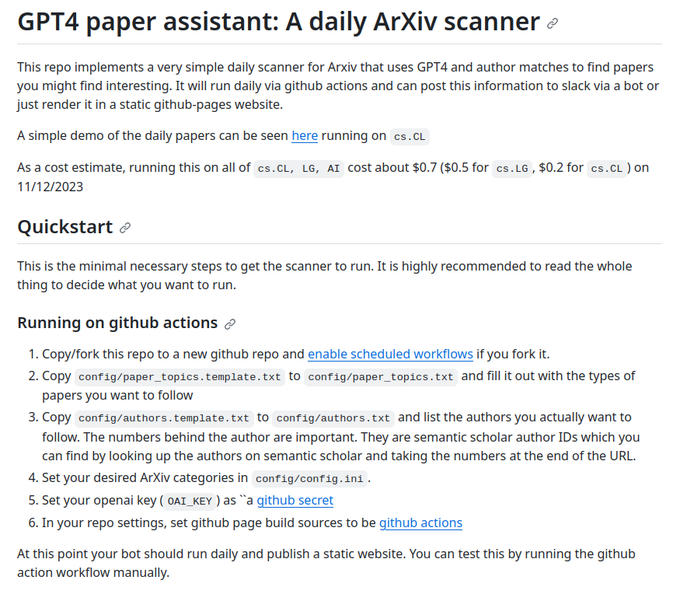

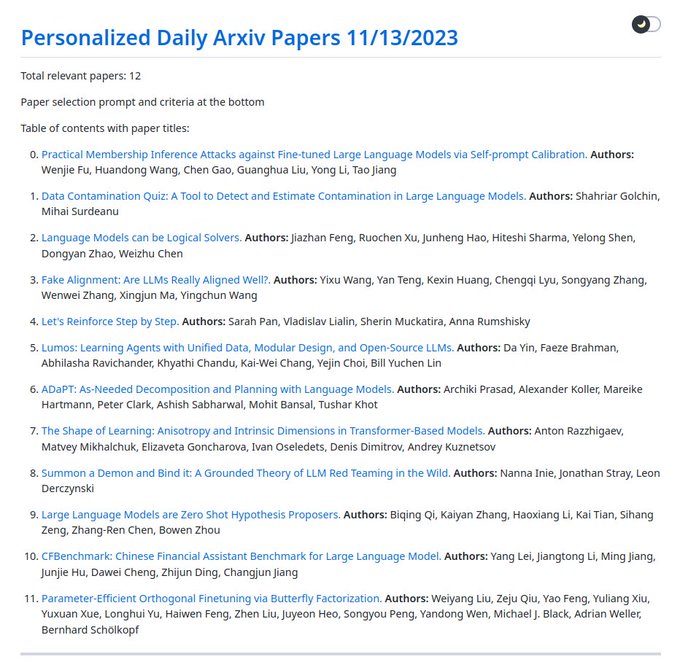

@lxuechen 100% of the credit goes to whoever made it. GitHub Actions is like putting Lego blocks together.


@soldni @SemanticScholar The author API is a real gem: it's very easy to match on desired authors or filter for basic author stats. I wish I could use the @SemanticScholar API for the arXiv feed update part too, instead of hitting the arXiv RSS endpoint.
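Matching and filtering authors this way can be sketched against the Semantic Scholar Graph API's author search endpoint. The base URL and field names (`name`, `paperCount`, `hIndex`) are assumptions to check against the official API documentation; the helper names and `min_h_index` are hypothetical, and the filter is exercised on a hand-written payload rather than a live response.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Sequence

API_BASE = "https://api.semanticscholar.org/graph/v1"  # assumed Graph API base URL


def author_search_url(name: str, fields: Sequence[str] = ("name", "paperCount", "hIndex")) -> str:
    """Build an author-search URL; `fields` selects which author attributes come back."""
    query = urllib.parse.urlencode({"query": name, "fields": ",".join(fields)})
    return f"{API_BASE}/author/search?{query}"


def fetch_json(url: str) -> Dict:
    """Live request (not exercised here); subject to the API's rate limits."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def filter_authors(payload: Dict, min_h_index: int) -> List[str]:
    """Keep names of authors whose reported h-index meets a threshold."""
    return [a["name"] for a in payload.get("data", []) if a.get("hIndex", 0) >= min_h_index]


url = author_search_url("Tatsunori Hashimoto")
# payload = fetch_json(url)
sample_payload = {"data": [{"name": "A. Author", "hIndex": 40}, {"name": "B. Author", "hIndex": 3}]}
strong_authors = filter_authors(sample_payload, min_h_index=10)
```

Keeping URL construction, fetching, and filtering separate makes the filter easy to test offline.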


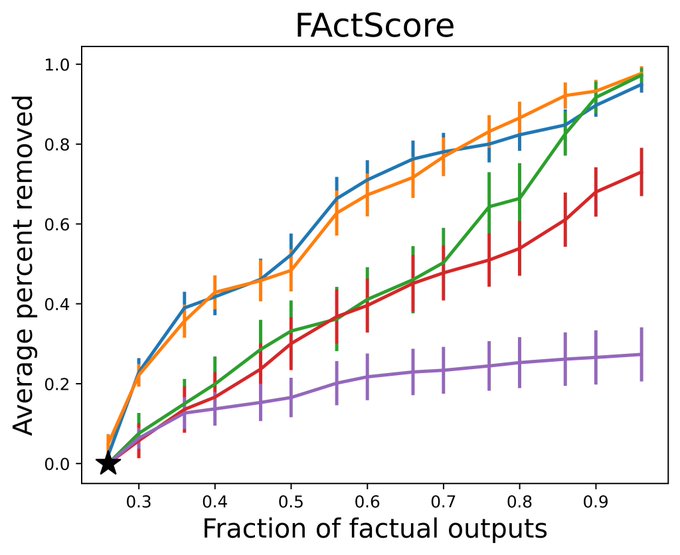

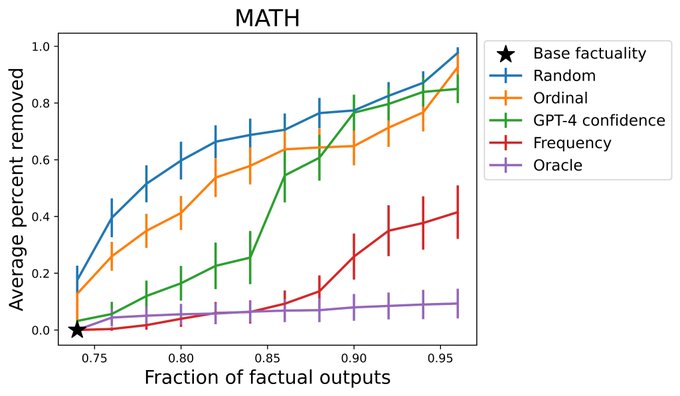

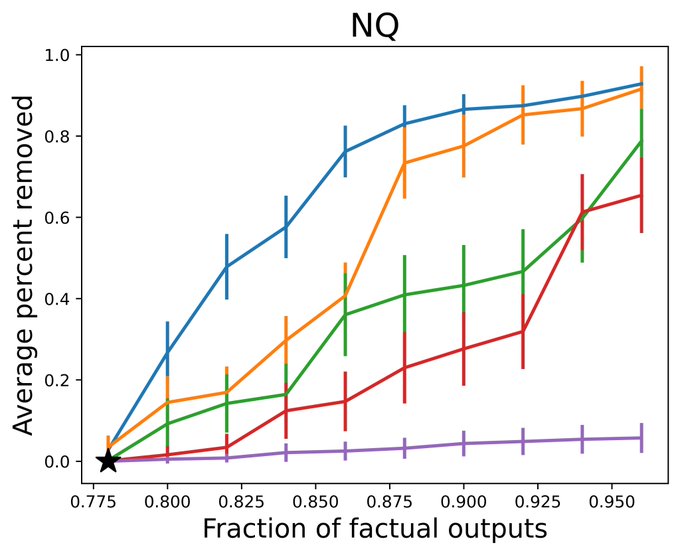

@haileysch__ Our cost estimates for naively running this on text-davinci-003 across all the benchmarks we wanted were a bit terrifying. We do have ideas on dealing with this, and hopefully will have more positive things to say soon.


This was work with @lxuechen, @florian_tramer, and @percyliang.

You should also check out the linked work (thanks to @thegautamkamath for an earlier shoutout to our work). They show that you can also get high-performance private models using low-rank tuning methods.


@jiajunwu_cs @StanfordAILab @Stanford @StanfordSVL @StanfordHAI Welcome!! Looking forward to having you join!


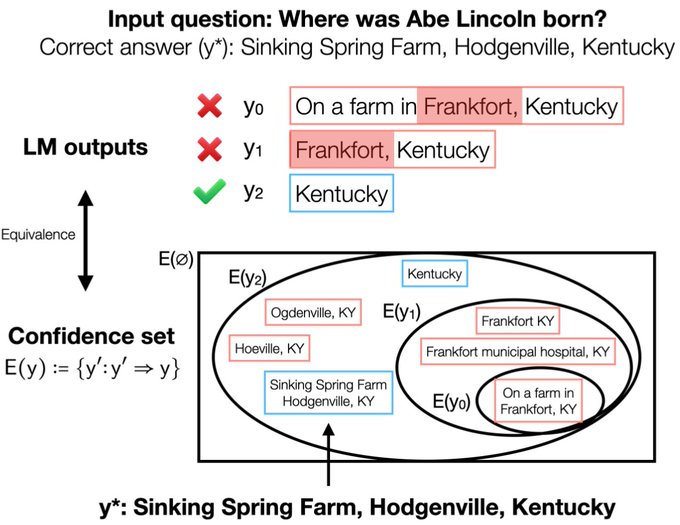

@gabemukobi Human (Chris) generated. The correctness annotation reflects it being technically true (correct but irrelevant). Our guarantees are always w.r.t. the annotator, so technically all guarantees are of the form "Chris would judge 90% of this as correct".


@BlancheMinerva @haileysch__ The naive (non-shared) version was something like 10k per dataset, but I think we now have a fundamentally better design for this, so I may reach out if we manage to find something better and viable.


@lreyzin @ben_golub This seems like a variant of the Reichenbach common cause principle, which is pretty interesting stuff. There is some fairly extensive discussion of it, including purported counterexamples, here:


@RylanSchaeffer @afciworkshop @rtaori13 @sanmikoyejo @stai_research @StanfordAILab @StanfordData Cool! It seems like an important question here is how fine-grained the calibration is. Calibrated only on the bias metric itself -> lots of room for amplification on the tail. Calibrated on all measurable functions -> fully stable. Reality is probably in between?


@srush_nlp I think it's nuanced. You might have learning algorithms that don't have the right stability properties, and a second risk is that the human data distribution changes from exposure to LLMs, and/or humans stop producing content, removing the stabilizing effect of human data.
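A toy linear model (an illustrative assumption, not the analysis from the paper) makes the stabilizing role of human data concrete. Let p_t be some statistic of the training data at round t (say, the rate of a biased behavior) and p_h its value in human-written data. Suppose each round's data is a fraction α of fresh human data plus model outputs that exaggerate the current deviation from p_h by a factor (1 + γ). Then p_{t+1} − p_h = (1 − α)(1 + γ)(p_t − p_h), so deviations shrink when (1 − α)(1 + γ) < 1 and grow once human data becomes scarce. `simulate_feedback` is a hypothetical helper.

```python
from typing import List


def simulate_feedback(p0: float, p_human: float, alpha: float, gamma: float, rounds: int) -> List[float]:
    """Iterate p_{t+1} = alpha * p_human + (1 - alpha) * model_output(p_t).

    Hypothetical model: its outputs exaggerate the current deviation from the
    human statistic by a factor (1 + gamma), i.e. it amplifies bias.
    """
    history = [p0]
    p = p0
    for _ in range(rounds):
        model_output = p_human + (1 + gamma) * (p - p_human)
        p = alpha * p_human + (1 - alpha) * model_output
        history.append(p)
    return history


# Plenty of fresh human data: contraction factor (1 - 0.5) * 1.2 = 0.6 < 1.
stable = simulate_feedback(p0=0.3, p_human=0.2, alpha=0.5, gamma=0.2, rounds=10)
# Humans mostly stop contributing: factor (1 - 0.05) * 1.2 = 1.14 > 1.
unstable = simulate_feedback(p0=0.3, p_human=0.2, alpha=0.05, gamma=0.2, rounds=10)
```

After ten rounds the first run sits within 0.001 of the human value, while the second has drifted above 0.5; in this toy, the same amplifying learner is stable or unstable depending only on how much fresh human data keeps arriving.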


@srush_nlp @sebgehr @fernandaedi @mrdrozdov Is the film a lot nicer than writing normally on the tablet? I can never get used to doing math on the tablet because of how the pen glides on the screen.


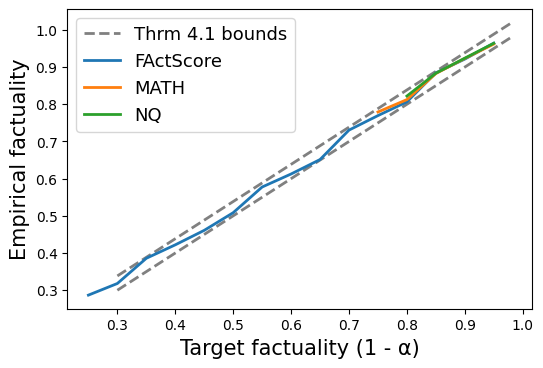

@ml_angelopoulos @Eric_Wallace_ I don't think Chris is active on Twitter, but I'll send him this thread. Section 3.3 of the conformal risk control paper was quite nice and a good inspiration for us.
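For readers unfamiliar with conformal risk control: given n calibration examples, a loss bounded by B that is non-increasing in a threshold λ, and a target risk α, one picks the smallest λ with (n/(n+1))·R̂_n(λ) + B/(n+1) ≤ α, where R̂_n is the empirical calibration risk; this bounds the expected loss on a new exchangeable example by α. The sketch below searches a finite grid of λ values; the grid, the toy losses, and the helper name are assumptions. In a setting like the one in the thread, the loss could indicate that the annotator judges an output incorrect, so any guarantee is relative to that annotator.

```python
from typing import Callable, Optional, Sequence


def conformal_risk_threshold(
    losses_at: Callable[[float], Sequence[float]],
    lambdas: Sequence[float],
    alpha: float,
    loss_bound: float = 1.0,
) -> Optional[float]:
    """Smallest lambda (from a grid) with (n/(n+1)) * R_hat + B/(n+1) <= alpha.

    `losses_at(lam)` returns the calibration losses at threshold `lam`; losses are
    assumed to lie in [0, loss_bound] and to be non-increasing in lam.
    """
    for lam in sorted(lambdas):
        losses = losses_at(lam)
        n = len(losses)
        empirical_risk = sum(losses) / n
        if n / (n + 1) * empirical_risk + loss_bound / (n + 1) <= alpha:
            return lam
    return None  # no grid value achieves the target risk


# Toy calibration set: one score per example; the loss at lam is 1 when the
# score exceeds lam, so the loss is non-increasing in lam.
calibration_scores = [i / 100 for i in range(1, 100)]  # n = 99
grid = [k / 100 for k in range(101)]
lam_hat = conformal_risk_threshold(
    lambda lam: [1.0 if s > lam else 0.0 for s in calibration_scores], grid, alpha=0.105
)
```

Here λ = 0.89 leaves 10 of 99 losses, giving an adjusted risk of 0.11, while λ = 0.9 leaves 9 and gives 0.10, so the search returns 0.9.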
