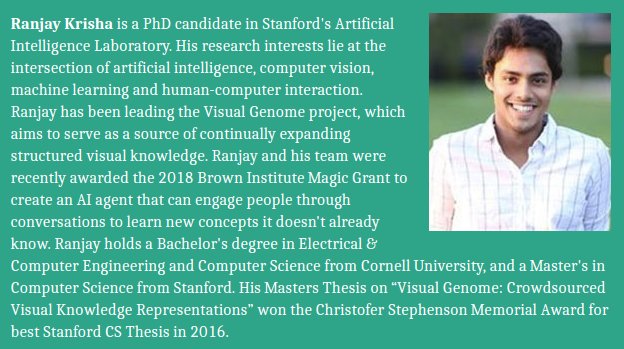

Ranjay Krishna

@RanjayKrishna

Followers

4,947

Following

420

Media

190

Statuses

1,769

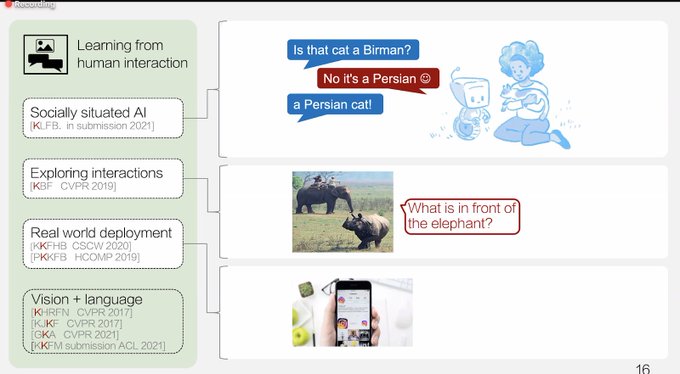

I teach machines to see and interact with people. + Assistant Professor @UWcse - Prev. Research scientist @MetaAI - PhD @StanfordAILab - Instructor @Stanford

California, USA

Joined August 2011

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

WIN HA TIFFANY BANGKOK

• 201610 Tweets

キスの日

• 201524 Tweets

Kabir Is God

• 57719 Tweets

#ティアラとKPが贈る6歳誕生日会生配信

• 46758 Tweets

ब्रह्माकुमारी पंथ

• 40731 Tweets

フラワームーン

• 29583 Tweets

#素のまんま

• 29055 Tweets

Nadal

• 17835 Tweets

#THE夜会

• 17823 Tweets

TheWallSong x KHUNPOLCOPPER

• 16591 Tweets

揖保乃糸

• 14545 Tweets

Alas Pilipinas

• 13599 Tweets

#ドラクエの日

• 11831 Tweets

オースティン

• 11254 Tweets

#かみさま登場

• 10492 Tweets

Angel Canino

• 10425 Tweets

みそきん再販

• 10178 Tweets

Last Seen Profiles

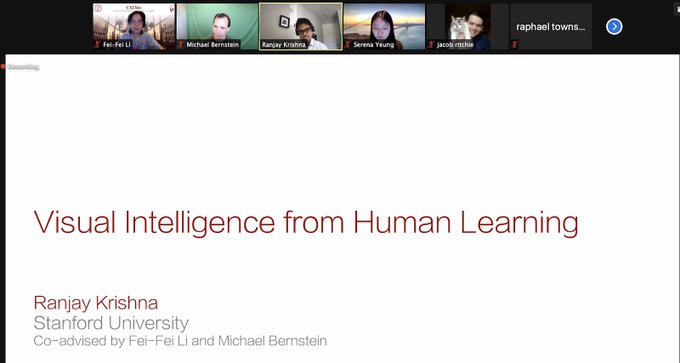

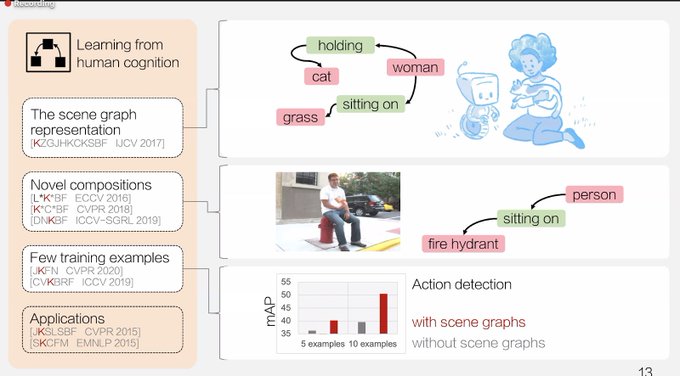

I successfully defended my PhD a few days ago. Huge thanks to my amazing advisors

@drfeifei

and

@msbernst

for supporting me throughout my journey.

A hearty congratulations to my student

@RanjayKrishna

(co-advised by

@msbernst

) for a successful PhD thesis defense! Great pioneering work in combining human cognition, human-computer interaction and

#AI

! Thank you PhD committee members

@chrmanning

@syeung10

@magrawala

🌹

12

23

483

24

7

319

Our submission received my first ever 10/10 review from NeurIPS. Check out our

#NeurIPS2023

Oral.

We release the largest vision-language dataset for histopathology and train a SOTA model for classifying histopathology images across 13 benchmarks across 8 sub-pathologies.

Quilt-1M has been accepted for an oral presentation at

@NeurIPSConf

. As promised, we have also released our data and our model:

See you all in New Orleans!

0

15

69

2

26

206

Our new paper finds something quite neat: We easily scale up how many tools LLMs can use to over 200 tools (APIs, models, python functions, etc.)

...without any training, without a single tool-use demonstration!!

9

27

162

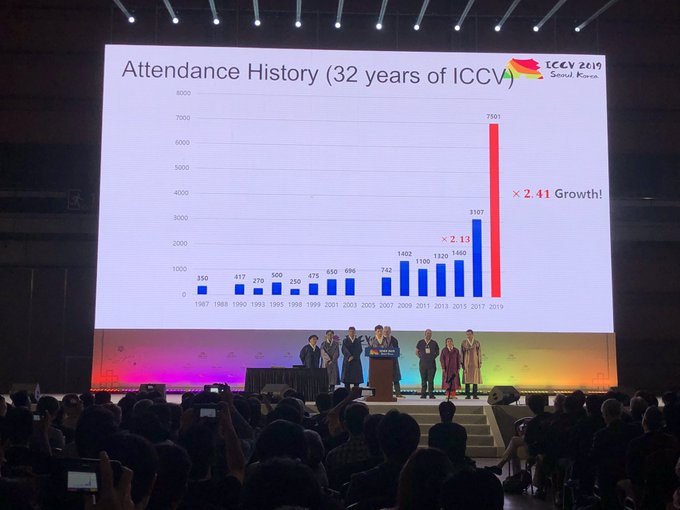

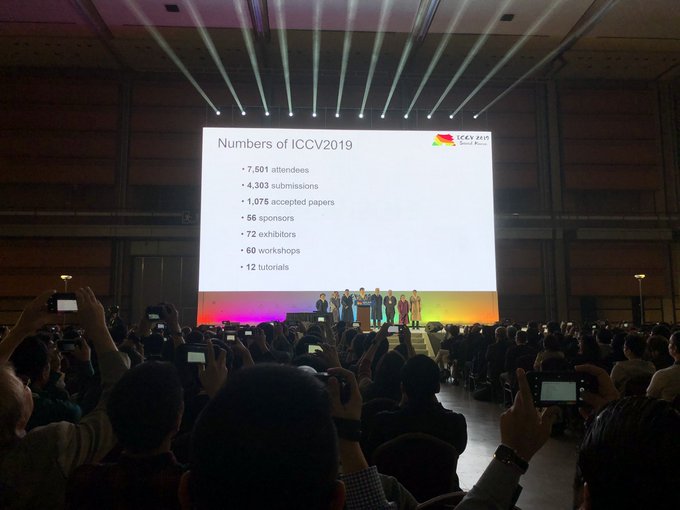

On my way to Seoul for

#ICCV2019

. If you’re at the conference on October 28th, come check out a full day workshop I am organizing on Scene Graph Representation and Learning (). We have a great lineup of speakers and posters.

4

16

130

Academic quarter recap: here's a staff photo after the last lecture of

@cs231n

. It's crazy that we were the largest course at Stanford this quarter. This year, we added new lectures and assignments (open sourced) on attention, transformers, and self-supervised learning.

2

2

128

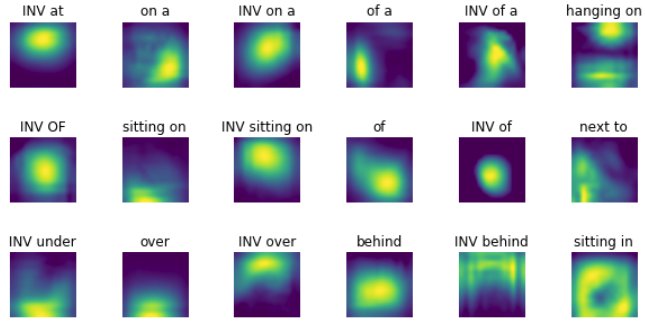

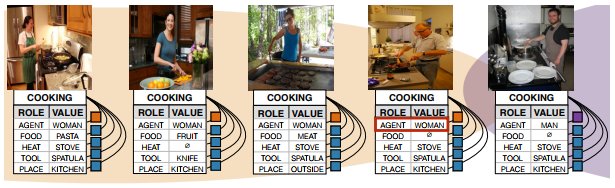

Someone made an in-depth video of our recent work at

#CVPR2018

on Referring Relationships. If you are interested in how we train models to disambiguate between different people or objects in images, go check it out.

#ComputerVision

#MachineLearning

1

40

110

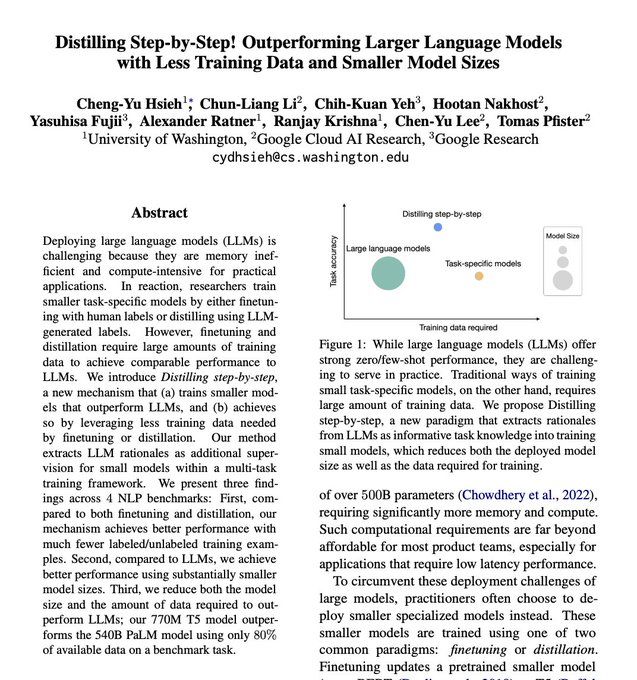

Deploying LLMs continues to be a challenge as they grow in model size and consume more data. We introduce a simple distillation mechanism to make even 770M T5 models outperform 540B PaLM.

Led by my PhD student

@cydhsieh

and with collaborators

@chunliang_tw

@ajratner

0

23

104

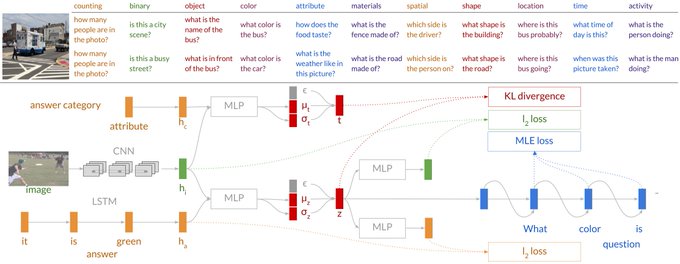

NEW dataset, NEW task, NEW model for dense video captioning. Work done with

@kenjihata

,

@drfeifei

and

@jcniebles

.

1

43

90

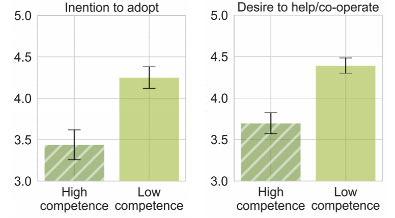

If you're releasing a new user-facing AI project/product, you might want to read our new

#CSCW2020

paper. We find that words or metaphors used to describe AI agents have a causal effect on users' intention to adopt your agent. Thread👇

2

18

94

Human-centered AI is no longer just a buzzword. It's a thriving, growing area of research. Come to our workshop tomorrow at

#ICML2023

to learn about it.

AI models have finally matured for mass market use. HCI+AI interactions will only become more vital.

1

11

85

Award for the most creative and least informative poster at

#cvpr2017

YOLO9000: better, faster, stronger!!

1

13

62

Congrats to Amir Zamir

@zamir_ar

, Silvio Savarese

@silviocinguetta

and co-authors for their Best paper award at

#CVPR2018

“Taskonomy: Disentangling Task Transfer Learning”

0

10

61

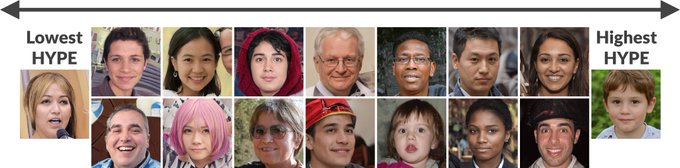

We updated our generative human evaluation benchmark with 6 GANs, 4 image datasets (generating faces and objects), 2 sampling methods. We show statistically insignificant correlation with FID and other automatic metrics. Use HYPE ()!

1

18

56

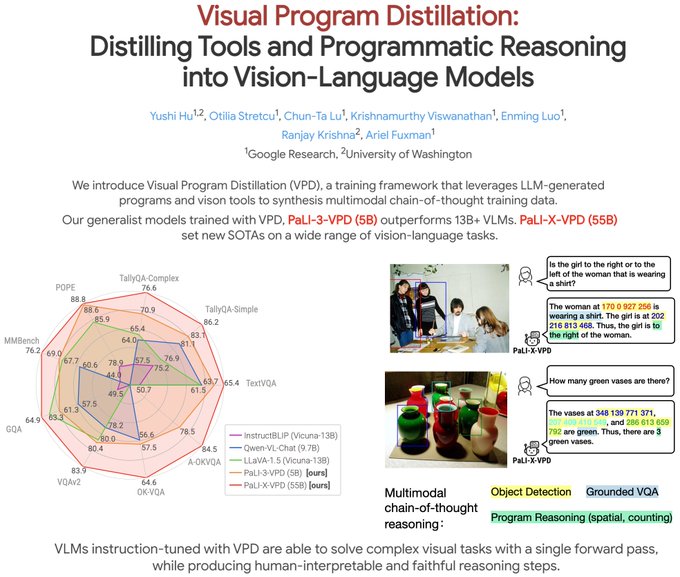

📈New paper! 1 training method, no new architecture, no additional data, SOTA results on 8 vision-language benchmarks.

Our 5B model variants even outperform 13B+ models!

Multimodal reasoning is hard. Even the best LMMs struggle with counting😥 Any fix for it?

Introduce VPD from

@GoogleAI

: we teach LMMs multimodal CoT reasoning with data synthesized from LLM + vision tools, and achieve new SOTAs on many multimodal tasks!🥳

7

69

264

1

10

62

With the ICCV ban finally lifted, here is our new

#ICCV2023

paper, which already has a few follow up papers.

Our method faithfully evaluates text-to-image generation models. It provides more than just a score; it identifies missed objects, incorrect attributes, relationships, etc

It is notoriously hard to evaluate images created by text-to-image models. Why not using the powerful LLMs and VLMs to analyze them?

We introduce TIFA🦸🏻♀️

#ICCV2023

, which uses GPT + BLIP to quantitatively measure what Stable Diffusion struggles on!

Proj:

2

24

86

0

8

58

New paper: Real-world image editing that is consistent with lighting, occlusion, and 3D shapes💀🧊🎧⚽️🍎!

We introduce a new 3D image editing benchmark called OBJect. Using OBJect, we train 3DIT, a diffusion model that can rotate, translate, insert, and delete objects in images.

0

8

48

New paper! Now that self-supervision for high-level vision tasks have matured, we ask what is needed for pixel-level tasks?

Given cog.sci. evidence, we show that scaling up learning multi-view correspondences improves SOTA on depth, segmentation, normals, and pose estimation.

1

7

42

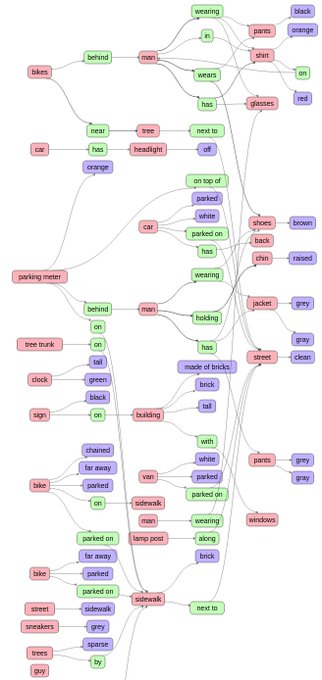

For researchers working on scene graphs or visual relationships, I just open sourced a simple library to easily visualize

#SceneGraphs

.

Now you can directly use this to generate your qualitative results in your publications.

1

4

42

Make sure to check out this new

#documentary

on PBS (

@novapbs

): "Can we build a brain?" with Fei-Fei (

@drfeifei

) and me. Check out the trailer here.

4

7

40

Hey everyone, we have a great lineup of speakers at our upcoming workshop on the importance of Compositionality in Computer Vision (), at

#CVPR2020

(with

@eadeli

,

@jcniebles

,

@drfeifei

,

@orussakovsky

). Consider submitting a paper. Also, stay safe.

0

4

36

@jebbery

And then when you start your job:

First task: What is 26 + 99?

Me: It's 19.

#Overfitting

1

2

33

Happy Thanksgiving everyone! We have released code and a demo for our

#ICCV2019

paper on Predicting Scene Graphs with Limited Labels. Check out

@vincentsunnchen

's GitHub repository here:

0

7

35

If you are attending

#CVPR2020

, we have some exciting things for you to attend and check out: 1) Come to our (w

@drfeifei

@jcniebles

@eadeli

Jingwei) workshop on Sunday on Compositionality in Computer Vision. We have an amazing line up of speakers.

1

1

30

Our work on dense

#video

#captioning

was featured in

#techcrunch

. Collaborators -

@drfeifei

,

@kenjihata

@jcniebles

2

8

32

Training robots requires data—which today is hard to collect. You need (1) expensive robots, (2) teach people to operate them, (3) purchase objects for the robots to manipulate.

Our

#CoRL2023

paper shows you don't need any of the 3, not even a robot! All you need is an iPhone.

0

1

32

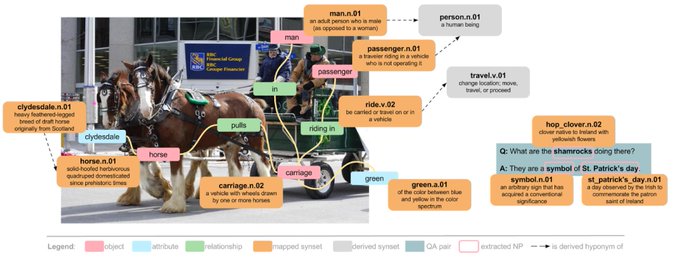

Visual Genome paper has now been released. Project advised by

@drfeifei

@msbernst

@ayman

@lijiali_vision

2

16

27

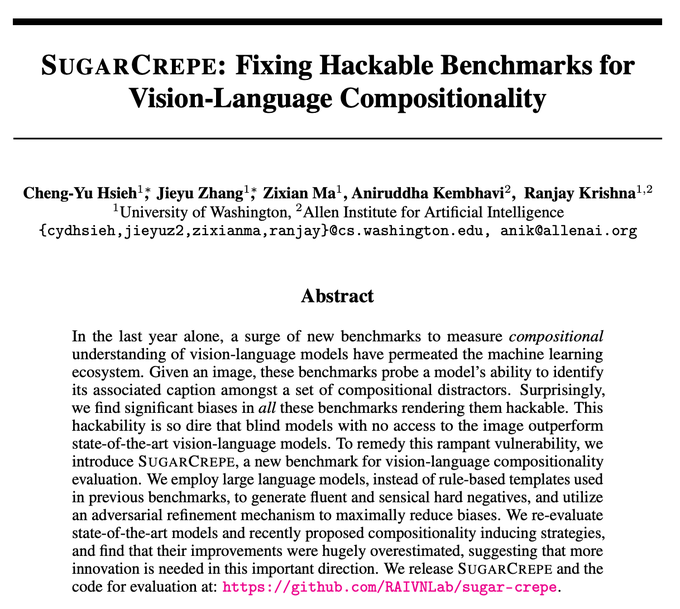

At

#CVPR2023

this year, I had a number of conversations about how we need a faithful benchmark for measuring vision-language compositionality.

SugarCrepe is our response. Our best models are still not compositional. It's time to make some progress!

0

3

30

Just redesigned and will be teaching a fun new course on

#computervision

with

@jcniebles

@Stanford

. Go check it out:

3

7

29

Funniest quote from

#iccv2019

: “strongly supervised learning is the opium of machine learning and now we are all hooked on it”

0

2

28

@CloudinAround

@joshmeyerphd

@AndrewYNg

Maybe you should read about what the problem really is before commenting. The waves tuition will be taxable under the new bill - making out PhD unaffordable.

0

0

26

Today we open sourced all 9 assignments for the

#ComputerVision

class I teach

@Stanford

with

@jcniebles

- allowing everyone to learn various concepts like lane detection, deformable parts, segmentation, dimensionality reduction, optical flow, etc.

1

10

26

There are so many vision-language models: OpenAI’s CLIP, Meta’s FLAVA, Salesforce’s ALBEF, etc.

Our

#CVPR2023

⭐️ highlight ⭐️ paper finds that none of them show sufficient compositional reasoning capacity.

Since perception and language are both compositional, we have work to do

Have vision-language models achieved human-level compositional reasoning? Our research suggests: not quite yet.

We’re excited to present CREPE – a large-scale Compositional REPresentation Evaluation benchmark for vision-language models – as a 🌟highlight🌟at

#CVPR2023

.

🧵1/7

3

14

69

0

1

27

Check out this new workshop and benchmark for studying vision systems that can navigate as social agents amongst people -- by my colleagues (

@SHamidRezatofig

) at

@StanfordSVL

.

0

5

24

@fchollet

It very much depends on the act function but for most cases, you want to use conv-bn-act. Without bn before act, saturated neurons will kill gradients. We do case studies of this across multiple activation functions in these slides:

2

0

25

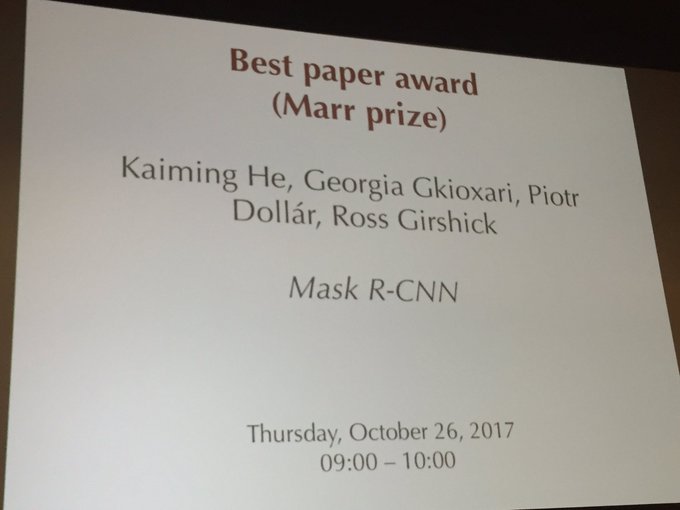

Extremely proud of my lab mates for winning best paper award at

#ICRA2019

on their work on self-supervised learning that combines vision and touch.

0

3

22

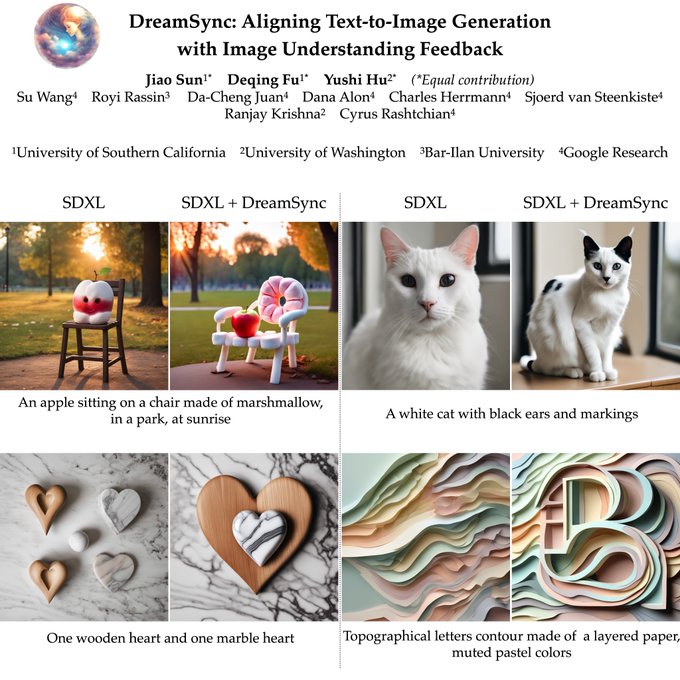

New paper: DreamSync improves any text-to-image generation model by aligning it better with text inputs.

We use DreamSync to improve stable diffusion XL.

Generated images not following your prompt?

Introducing 𝔻𝕣𝕖𝕒𝕞𝕊𝕪𝕟𝕔 from

@GoogleAI

: improving alignment + aesthetics of image generation models with feedback from VLMs!

✅ Model Agnostic

✅ Plug and Play

❌ RL

❌ Human Annotation

❌ Real Image

5

70

323

0

2

25

Our paper has been accepted at

#ICCV2019

. Come check us out in Seoul later this year. In the meantime, we are planning on releasing code soon.

2

0

23

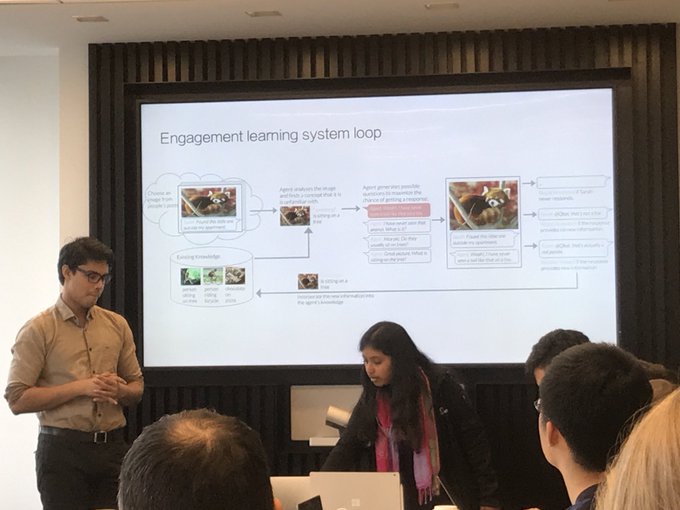

I will be speaking at an event tomorrow at

@Stanford

on the importance of

#Trust

and

#Transparency

in Human-AI collaboration. Come stop by to hear about how we can build dynamic learning systems that can continuously learn from interactions with humans.

3

5

23

For those who enjoy deep learning videos,

@labs_henry

's YouTube channel just made an easy to digest video summarizing our ACL paper ():

1

5

19

Speaking of collection behavior, check out our new paper at NeurIPS 2022. Inspired by how animals coordinate to accomplish tasks, we design a simple multi-agent intrinsic reward that allows decentralized multi-agent training, allowing AI agents to even adapt to new partners.

1

2

21

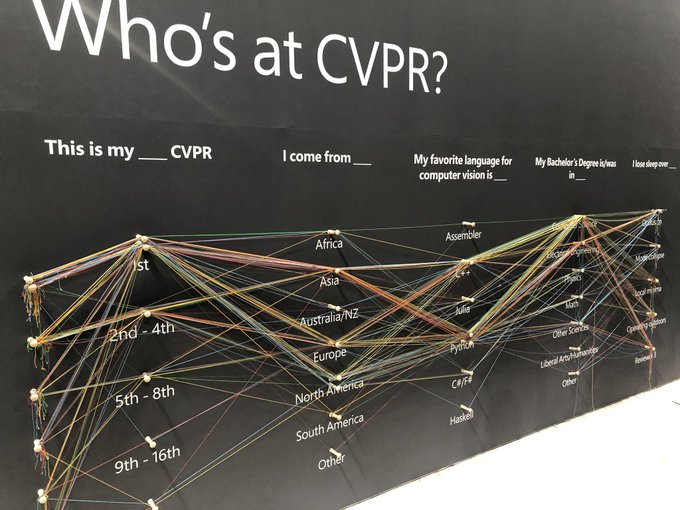

Lots of first time

#cvpr2019

attendees from Asia who code in python and studied computer science

0

2

20

Blog post explaining the math behind all the generative adversarial network papers

#GAN

. It's a fun short read.

0

4

19

Google translates Turkish gender neutral "O bir doktor. O bir hems¸ire." to "He is a doctor. She is a nurse."

#bias

2

10

17

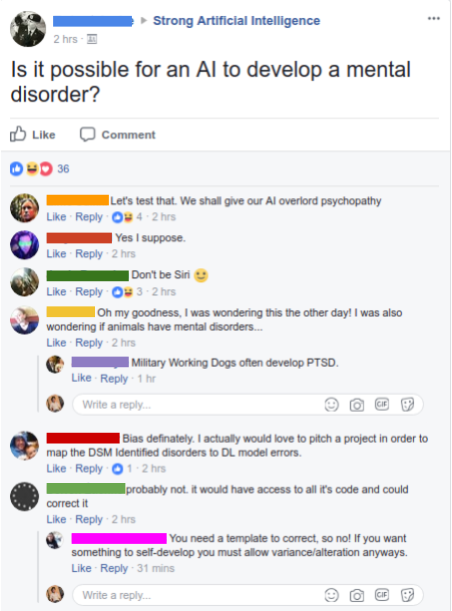

Joined a

#AI

and

#deeplearning

group on Facebook. Starting to realize that the public has no idea what

#ArtificialIntelligence

actualy is.

4

1

15

Check out our research work on how to design chatbots that people want to adopt!!

Consumers have consistent personality preferences for their online friends, new

@StanfordHAI

research shows.

2

14

50

0

1

16

Old-school research presentations can be boring. Check out this fun creativity skit Helena put together to explain our new CSCW paper.

TLDR: Recent works keep finding that AI explanations don't help people make better decisions. We propose a theory for when they do help!

0

2

16

If you’re at

#ICCV2023

, reach out and come say hi. I will be giving two talks:

- one at the closing the loop in vision and language workshop:

- one at the scene graph workshop:

1

0

16

On a personal note, I am going to miss co-instructing with

@danfei_xu

and

@drfeifei

. This is my 5th and last time instructing at Stanford. I have learned so much from working with so many amazing teaching assistants and students. Thank you, everyone.

1

0

16

@chipro

It's subjective. I would personally say scaling up models *IS* academic research. It's easy to dismiss it as not innovative. But research is also about studying the outcomes of design decisions/interventions. In this case, the intervention is increasing model size.

0

0

14

I have been extremely lucky to have

@timnitGebru

as a labmate and as a friend. Thank you for sharing your brilliant work and always being generous with your precious time. I am appalled you are dealing with this. I am here to support and help in any way I can.

I was fired by

@JeffDean

for my email to Brain women and Allies. My corp account has been cutoff. So I've been immediately fired :-)

390

1K

7K

0

0

14

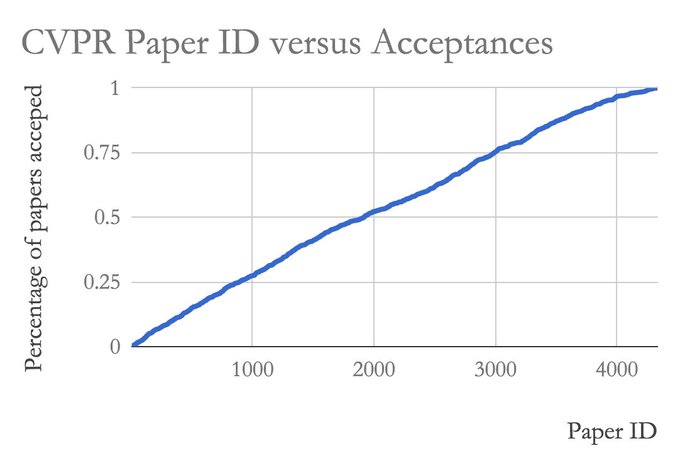

#CVPR2018

results just came out!! There doesn't appear to be any correlation between paper ID and whether your paper will get accepted, unlike past vision conferences.

0

7

13

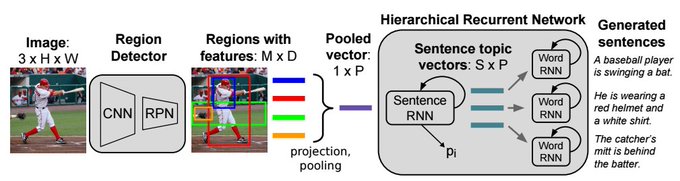

Check out our latest work on Generating Descriptive Image Paragraphs with

@jkrause314

and

@drfeifei

0

4

13

Here is a neat visualization exploring motifs in the visual world using relationships from

@VisualGenome

. Adding structure allows us to further vision research and ask questions like: "what kinds of objects usually contain food?→bowls, plates, table"

1

0

14

Men also like shopping!

#EMNLP2017

best paper reduces gender bias in visual recognition using Lagrangian relaxation.

2

3

12

We have an amazing group of speakers lined up:

Nikola Banovic

@nikola_banovic

Anca Dragan

@ancadianadragan

James Landay

@landay

Q. Vera Liao

@QVeraLiao

Meredith Ringel Morris

@merrierm

Chenhao Tan

@ChenhaoTan

0

1

13

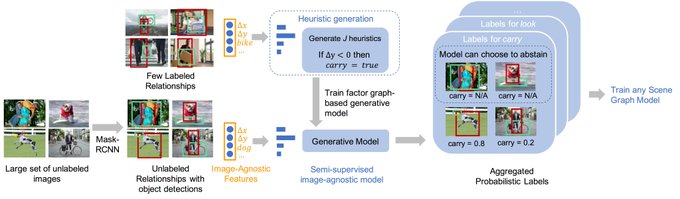

Check out our newest paper! We automatically assign probabilistic relationship labels to images and can use them to train any existing scene graph model with as few as 10 examples.

1

0

13

Congratulations

@PranavKhadpe

!!! Contrary to how today's AI products are marketed, our paper finds that people are more likely to adopt and cooperate with AI agents that project low competence but outperforms expectations and are less forgiving when they project high competence.

Excited to share that our paper, "Conceptual Metaphors Impact Perceptions of Human-AI Collaboration", was awarded an Honorable Mention at

#CSCW2020

🎇

Paper:

Want to say a huge thank you to my co-authors

@RanjayKrishna

@drfeifei

@jeffhancock

and

@msbernst

7

3

131

0

2

13

If you are the anonymous reviewer who wrote a 12 page review for my

@VisualGenome

paper, I just wanna say that you're awesome.

#bestReviewer

1

0

12

Embodied AI has been limited to simple lifeless simulated houses for many years.

Just like the Holodeck in the Star Trek episodes I grew up watching, our Holodeck system allows you to create diverse lived in 3D simulated environments populated with thousands of objects:

🛸 Announce Holodeck, a promptable system that can generate diverse, customized, and interactive 3D simulated environments ready for Embodied AI 🤖 applications.

Website:

Paper:

Code:

#GenerativeAI

[1/8]

8

101

404

0

0

21

Had a great time presenting some of my latest research in NYC.

0

1

11

Its refreshing to see corporations using their strengths/capabilities to give back to their society.

@DoorDash

just launched Project Dash () to use their network of restaurants and their suite of cars to deliver food to those who are hungry.

#dashforgood

2

4

10

Apparently, all of the research Google is doing is some kind of AI now. What happened to systems? social sciences? HCI? UX research?

INNOVATION != AI

Hmm, have I made a wrong turn? I was looking for

@GoogleResearch

…

Nope, you're in the right place! We’re unifying all of our research efforts under “Google AI”, which encompasses all the state-of-the-art innovation happening across Google. Learn more at

29

349

996

1

2

11

Very excited to see more of Ross!

Incredibly excited to announce that Ross Girshick (

@inkynumbers

) will be joining the PRIOR team

@allen_ai

!

Ross is one of the most influential and impactful researchers in AI. I'm so honored that he is joining us, and I'm really looking forward to working with him.

6

22

304

0

0

11

@christianrudder

I hope you go back and realize how uncomfortable you made people feel at

#chi2018

. Your talk was a heteronormative disaster.

0

2

10

Go check out our

#NeurIPS2019

*Oral* talk for "HYPE: Human eYe Perceptual Evaluation of Generative Models" today at 4:50pm at West Exhibition Hall C + B3. Also, HYPE now offers *evaluation as a service* for generative models at

0

2

10

Reviewing an

#ECCV2018

paper and apparently this paper beats its baselines by XXX%.

#prematureSubmission

2

2

10

Version-1.2 of

@VisualGenome

dataset has been released: MORE data, CLEANER annotations, DENSER graphs.

0

6

10