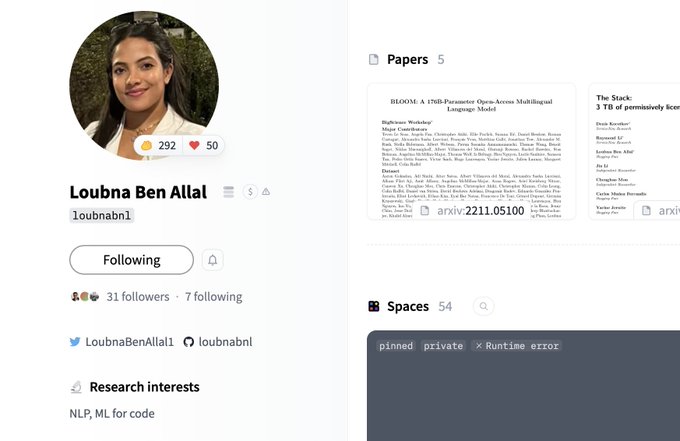

Loubna Ben Allal

@LoubnaBenAllal1

Followers

3,873

Following

636

Media

79

Statuses

543

ML Engineer @huggingface 🤗 | @ENS_ParisSaclay - MVA

Paris, France

Joined September 2020


Today is my first day at

@huggingface

as a Machine Learning Research intern. I'm thrilled to be joining such an amazing team and contribute to democratizing the ML community 🤗

14

23

512

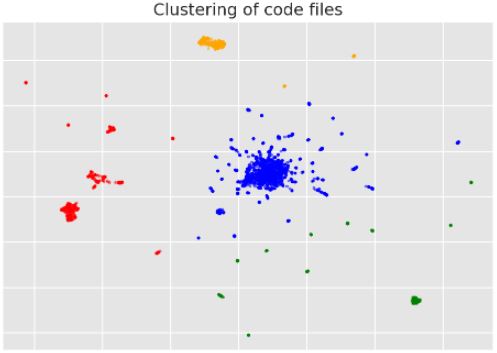

This is my second week as an intern at

@huggingface

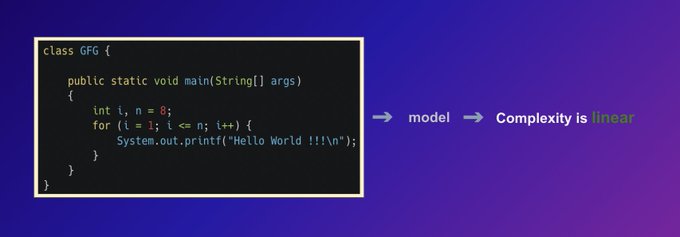

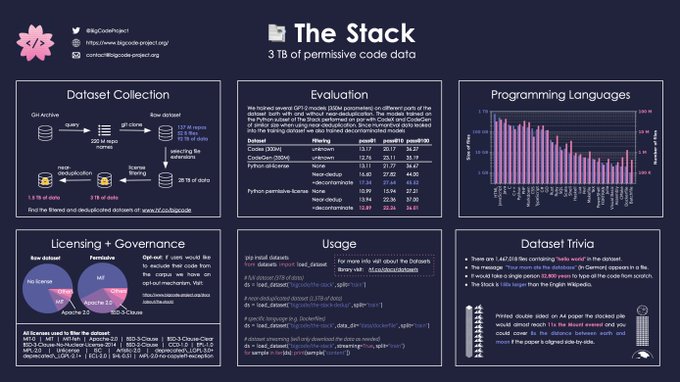

. I am working on code models and as a first project I did some exploration of a large multilingual code dataset 💻. A thread:

7

29

320

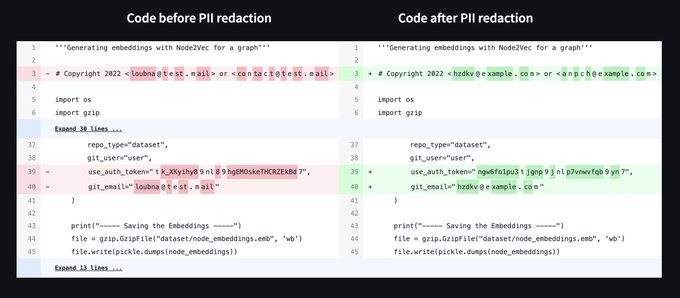

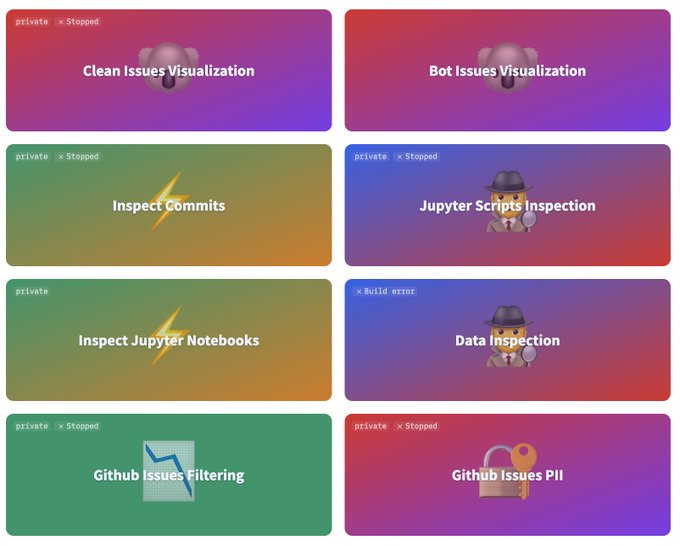

🧵 Here's how we're tackling text de-identification to remove personal information from code datasets at

@BigCodeProject

:

- An annotated benchmark 📑

- A pipeline for PII detection and anonymization 🚀

- A demo to visualize anonymized samples 🔍

(1/n)
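As a rough illustration of the detect-then-anonymize idea, here is a minimal sketch. It is not the BigCode pipeline: the two regular expressions, the `detect_pii` and `anonymize` helpers, and the placeholder format are all assumptions made for this example, and they only cover emails and IPv4 addresses.

```python
import re

# Illustrative patterns only; a production detector needs far more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def detect_pii(text):
    """Return (start, end, label) spans for every match, sorted by position."""
    spans = [(m.start(), m.end(), "EMAIL") for m in EMAIL_RE.finditer(text)]
    spans += [(m.start(), m.end(), "IP_ADDRESS") for m in IPV4_RE.finditer(text)]
    return sorted(spans)


def anonymize(text):
    """Replace each detected span with a placeholder such as <EMAIL>."""
    out, cursor = [], 0
    for start, end, label in detect_pii(text):
        if start < cursor:  # skip a match that overlaps the previous one
            continue
        out.append(text[cursor:start])
        out.append(f"<{label}>")
        cursor = end
    out.append(text[cursor:])
    return "".join(out)
```

Calling `anonymize` on a code comment that contains an email address and an IP address returns the same comment with both replaced by placeholders.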

2

36

143

@nvidia

@DBahdanau

Debugging, although complex, is an effective way to fix small bugs, even if fixing them doesn't solve the initial problem ✨. It can be tricky for training scripts, as some changes take longer to show their impact. We are now ready and training more models! Stay tuned 🚀 (14/14)

8

3

84

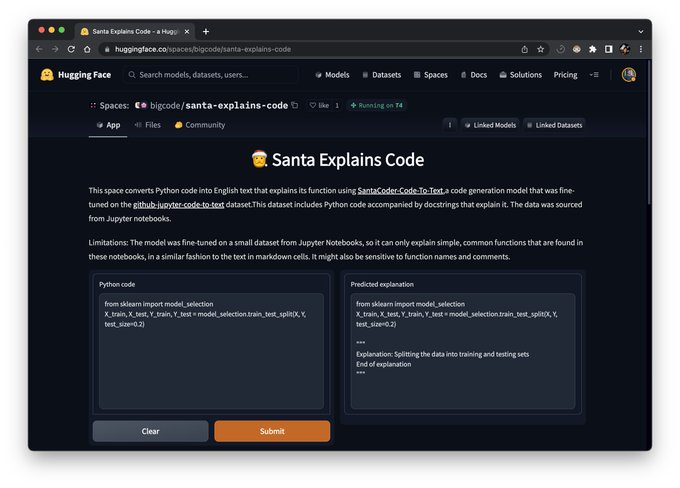

🚀 Fine-tune SantaCoder on code generation datasets with this repo:

A Google Colab by

@mrm8488

is also available.

✨ Bonus: we fine-tuned SantaCoder on Jupyter Notebooks to make it explain code

2

15

78

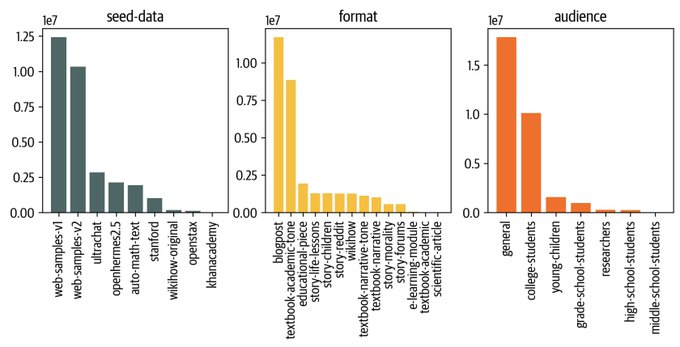

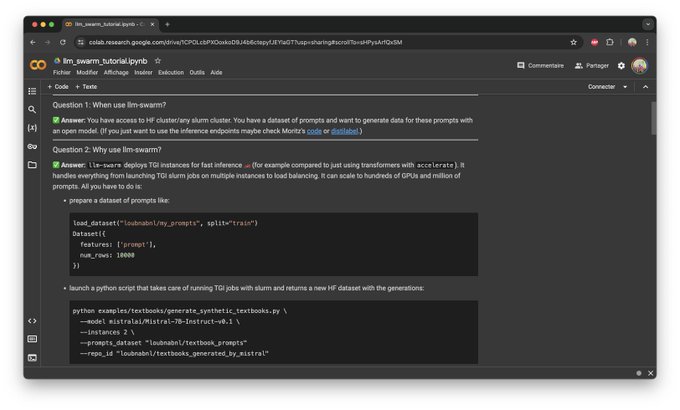

Here's the full list of the resources and links:

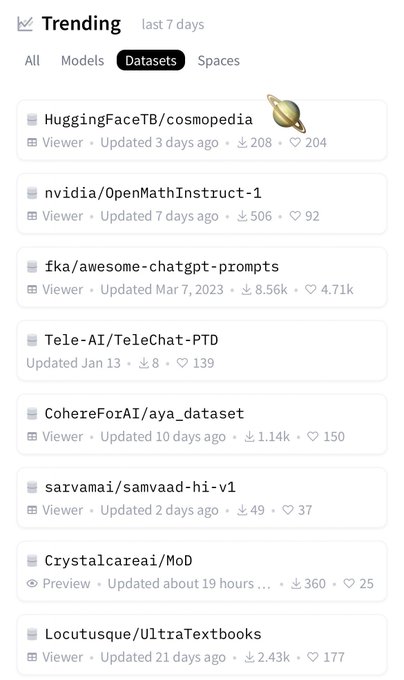

- Cosmopedia dataset:

- Cosmo-1B model:

- GitHub code:

⚡ Other libraries we used:

- llm-swarm for large scale synthetic data generation:

1

13

72

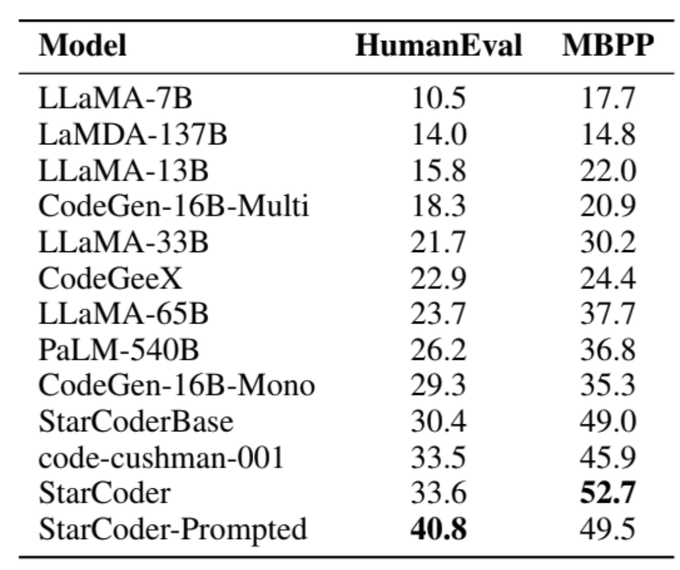

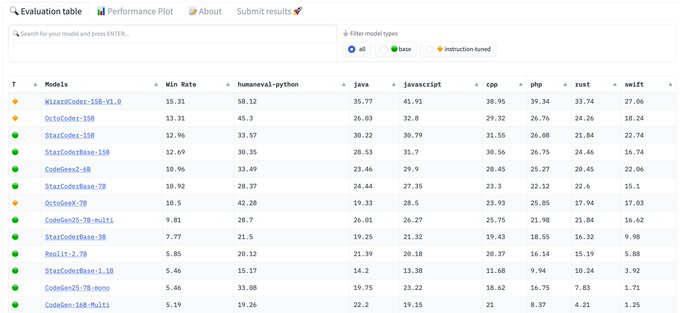

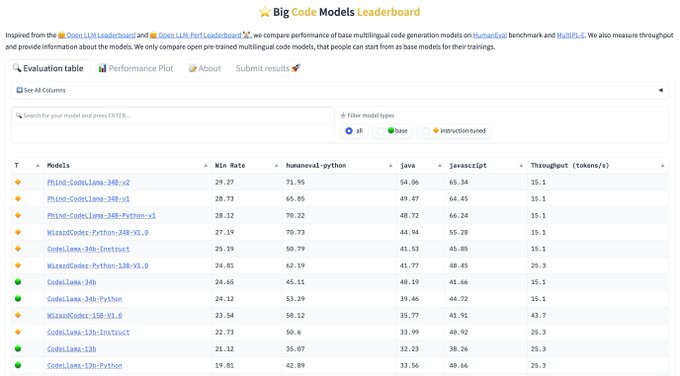

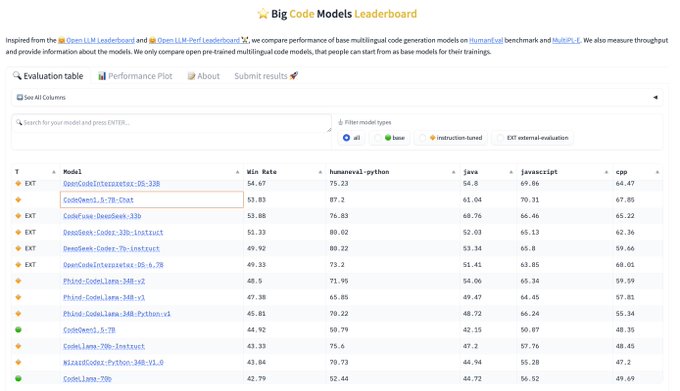

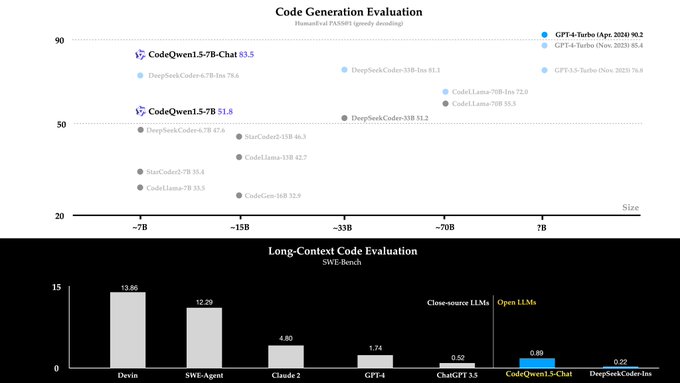

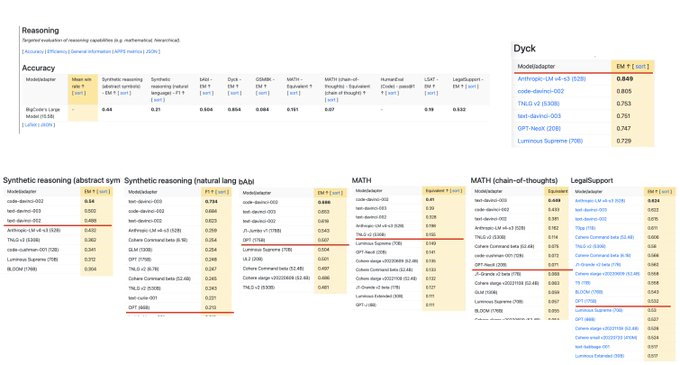

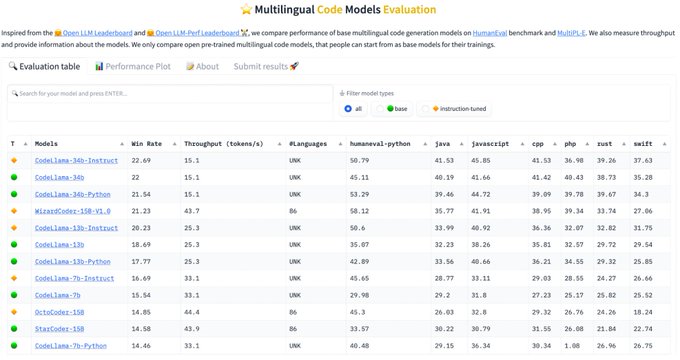

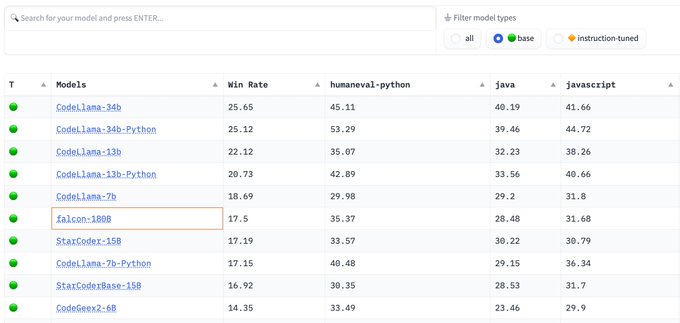

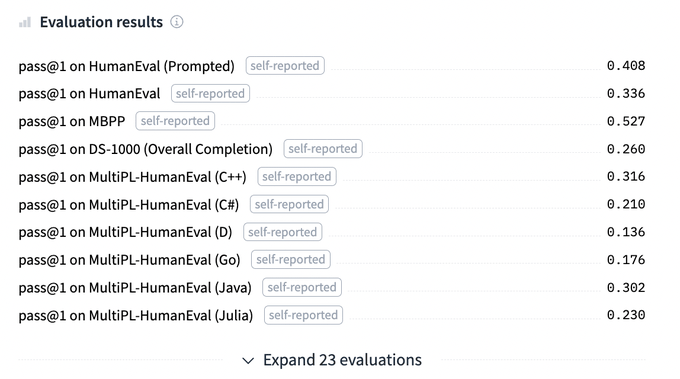

The new CodeQwen1.5-7B models rank very high on the BigCode Leaderboard, outperforming much larger models🚀

1

9

62

@nvidia

@DBahdanau

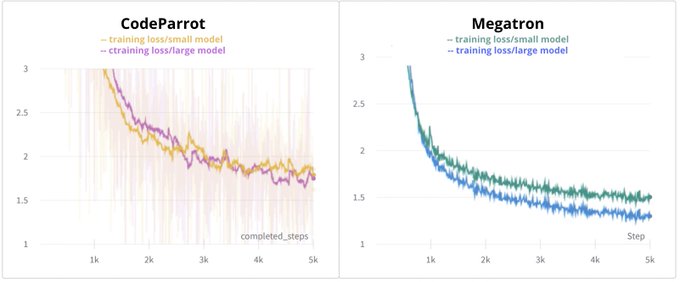

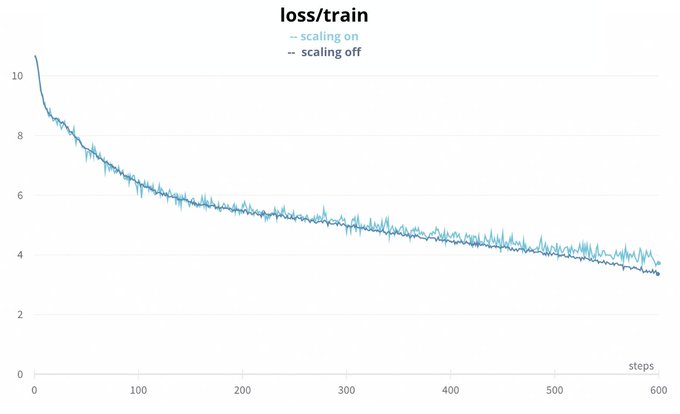

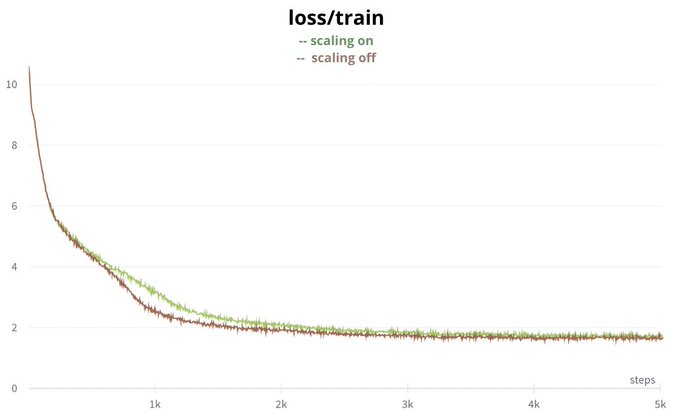

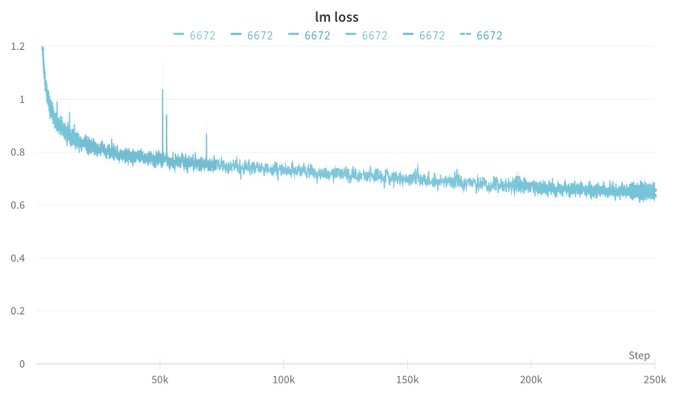

🥁 And indeed when training with all these fixes a bit longer we noticed that the discrepancy was gone and we now match Megatron's performance 🥳! (13/n)

2

2

56

@nvidia

@DBahdanau

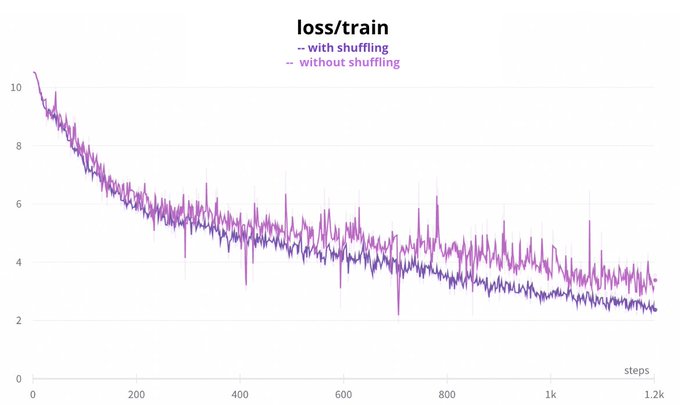

Next we tried the data loader from Megatron. Initially we had a shuffling problem (credits to

@DBahdanau

): the files were shuffled but not the sequences, so sequences from one long file could fill up a whole batch. Fixing this improved the training considerably but wasn’t enough. (7/n)
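The difference between shuffling files and shuffling sequences can be sketched in a few lines. This is not Megatron's data loader or the CodeParrot script: `split_into_sequences`, `make_batches`, and their parameters are hypothetical names used only to show the idea.

```python
import random


def split_into_sequences(tokens, seq_len):
    """Cut one tokenized file into fixed-length sequences (drops the remainder)."""
    return [tokens[i:i + seq_len] for i in range(0, len(tokens) - seq_len + 1, seq_len)]


def make_batches(files, seq_len, batch_size, seed=0):
    """Shuffle at the sequence level so one long file cannot fill a batch."""
    sequences = []
    for file_id, tokens in enumerate(files):
        for seq in split_into_sequences(tokens, seq_len):
            sequences.append((file_id, seq))
    random.Random(seed).shuffle(sequences)  # shuffle sequences, not just files
    return [sequences[i:i + batch_size] for i in range(0, len(sequences), batch_size)]
```

If only the file order were shuffled, the ten sequences of a 40-token file (with `seq_len=4`) would stay adjacent and fill consecutive batches on their own. Shuffling the flattened list of sequences spreads them across batches.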

2

1

49

@nvidia

@DBahdanau

At this stage, we thought we might’ve missed something in the points above. We found there was a difference between the frameworks in the scaling of the attention weights in mixed precision. We fixed the difference and finally got a slight improvement! (9/n)

2

1

41

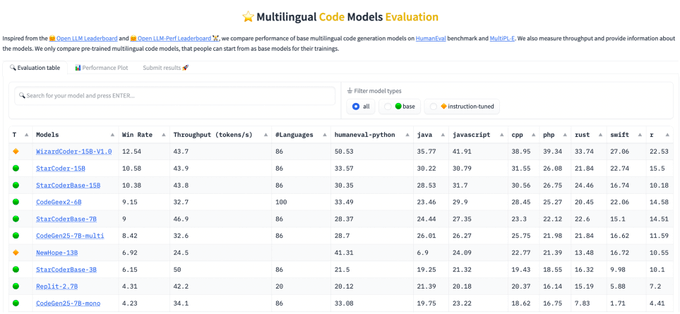

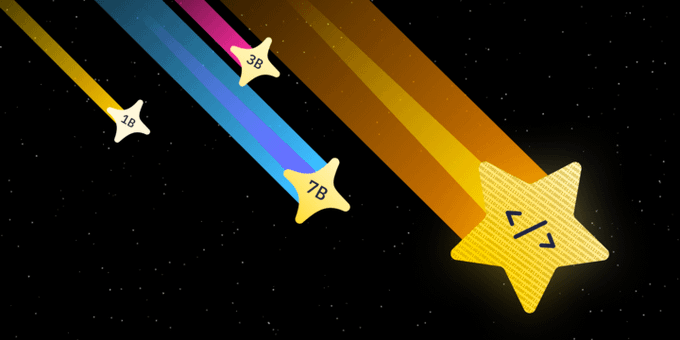

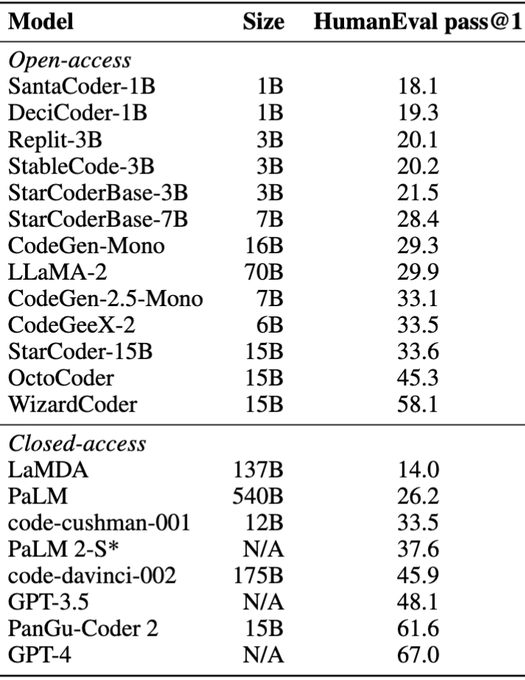

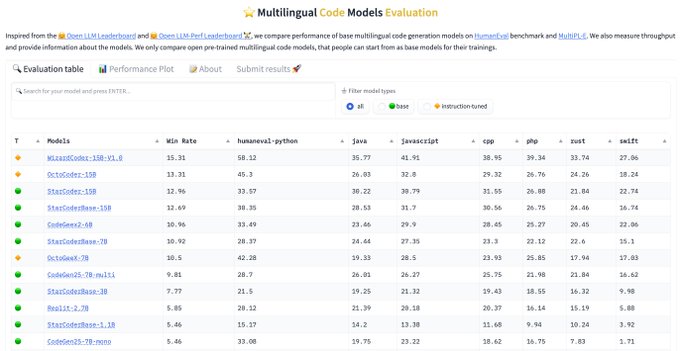

Based on popular demand, we trained smaller versions of 💫 StarCoder.

Check this leaderboard for more details on their performance compared to other base code models:

2

9

40

Last week, I gave a keynote about

@BigCodeProject

and

@huggingface

ecosystem to 1,500 attendees at the

@KubeCon_

&

@CloudNativeFdn

summit and GOSIM conference in Shanghai. It was a great chance to meet the Open Source community and discuss AI!

Slides:

6

3

40

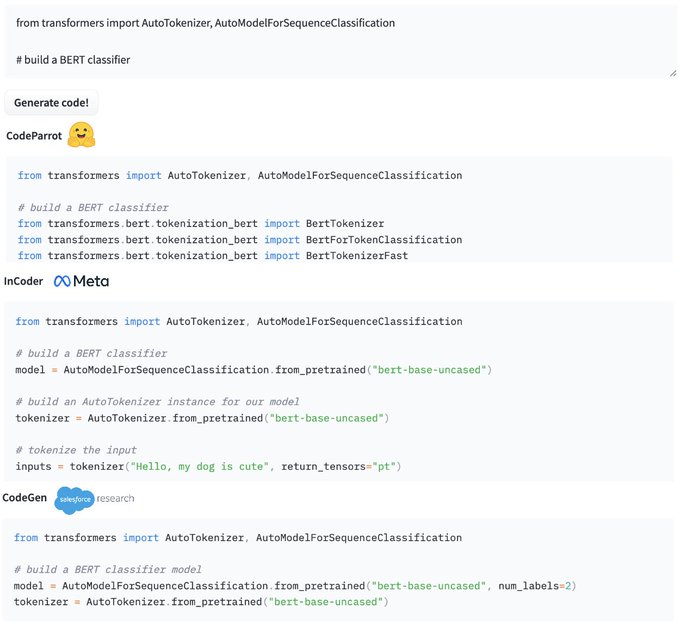

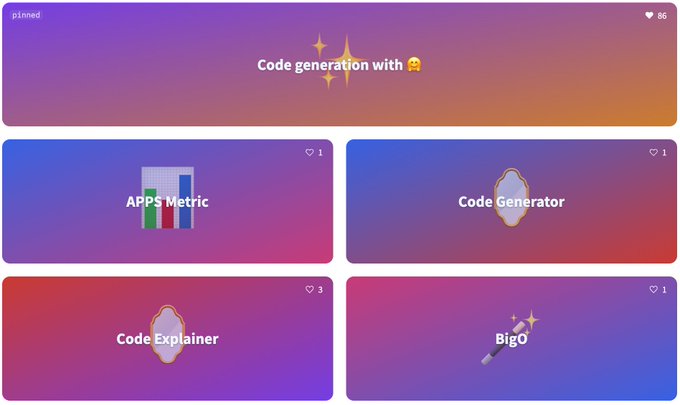

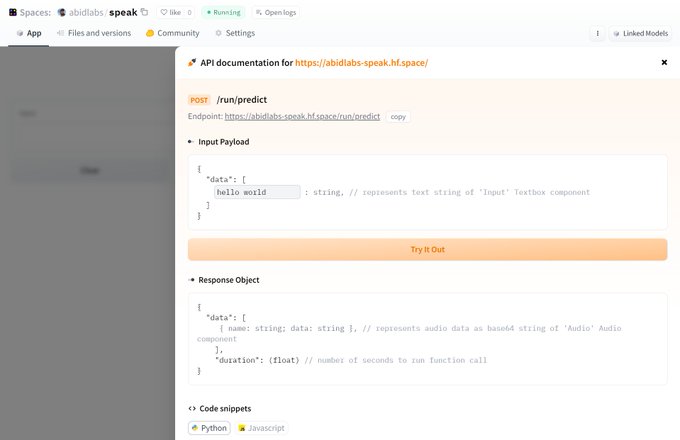

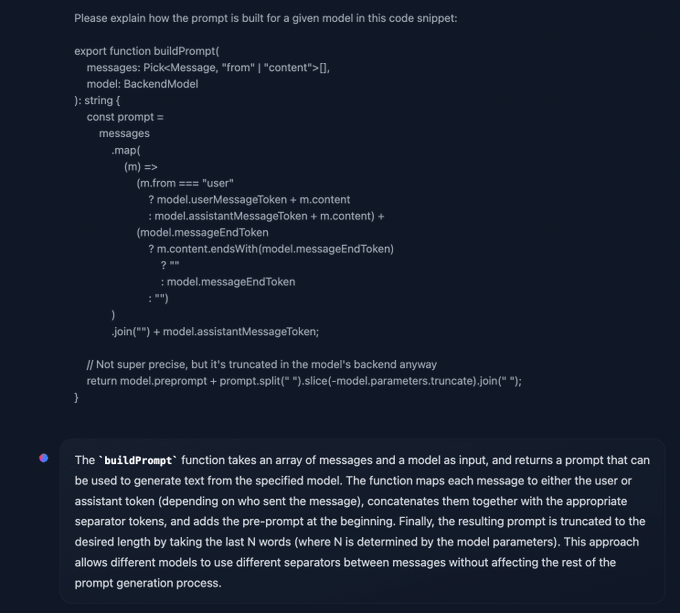

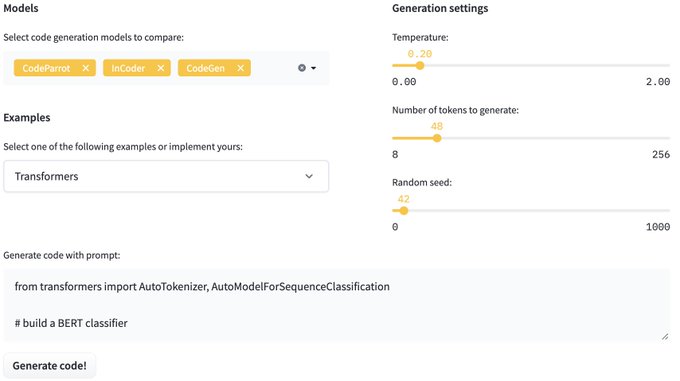

Another cool feature for Gradio. We used these API endpoints in our Code Generation blog to call 3 other spaces in parallel threads without needing to load many large models in one space.

Code Generation Blog:

CodeGen space:

Really stoked to share Gradio's new "Use via API" page

1⃣ Build a

@Gradio

app (or find one on Spaces)

2⃣ Click on the "Use via API" link in the footer

3⃣ See the expected payload and try it out immediately

4⃣ View handy code snippets in Python or JS

Embed ML everywhere!

5

24

154

0

7

39

Another architectural change which feels like a must for every new language model is Multi-Query Attention: you can process larger batches, and faster!

If you have ever evaluated a code model, you know how necessary that is
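One way to see why Multi-Query Attention allows larger batches is to estimate the key/value cache kept in memory during generation. The sketch below is back-of-the-envelope arithmetic, not a measurement: the model shape is hypothetical, and `kv_cache_bytes` is a helper defined only for this example.

```python
def kv_cache_bytes(batch_size, seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer during generation."""
    # The factor 2 accounts for storing both keys and values.
    return 2 * batch_size * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_value


# Hypothetical model shape, chosen only for illustration (16-bit values).
config = dict(batch_size=32, seq_len=2048, n_layers=24, head_dim=128)

multi_head = kv_cache_bytes(n_kv_heads=16, **config)   # one K/V pair per attention head
multi_query = kv_cache_bytes(n_kv_heads=1, **config)   # one K/V pair shared by all heads

print(f"multi-head : {multi_head / 2**30:.2f} GiB")
print(f"multi-query: {multi_query / 2**30:.2f} GiB")
```

With 16 heads, the multi-query cache is 16 times smaller, and the freed memory can go to a larger batch. That matters for code evaluation, where many samples are generated per problem.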

2

2

35

@nvidia

@DBahdanau

First, we observed that our loss had more noise than Megatron’s. We used distributed training and were plotting the training loss of the main worker only; plotting the average over the workers made the loss way smoother! (3/n)
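The effect is easy to reproduce with made-up numbers. In the sketch below, `average_loss_across_workers` is a hypothetical helper and the loss values are invented. In a real distributed run the per-worker losses would be gathered with a collective operation before logging.

```python
def average_loss_across_workers(per_worker_losses):
    """per_worker_losses[w][t] is the loss of worker w at step t; returns the per-step mean."""
    n_workers = len(per_worker_losses)
    return [sum(step) / n_workers for step in zip(*per_worker_losses)]


# Invented values: each worker sees a different batch, so each curve is noisy.
losses = [
    [2.0, 1.6, 1.9, 1.2],  # main worker only: the curve that was plotted at first
    [2.4, 2.0, 1.3, 1.6],
    [2.2, 1.8, 1.6, 1.4],
]
averaged = average_loss_across_workers(losses)
```

The main worker's curve goes down, up, then down again, while the averaged curve decreases steadily, because each worker's batch-level noise partly cancels out in the mean.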

1

0

35

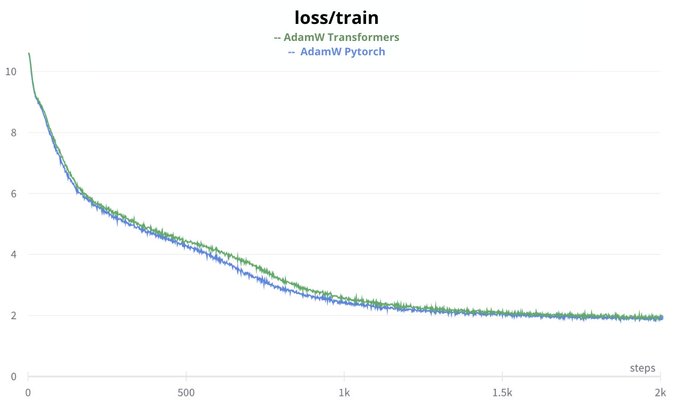

@nvidia

@DBahdanau

Then we thought maybe the optimizer was the issue: the transformers implementation of AdamW is slightly different from PyTorch's and will be deprecated. Switching to AdamW from PyTorch gives better behavior after the warmup stage, but then the performance becomes similar. (11/n)

1

0

34

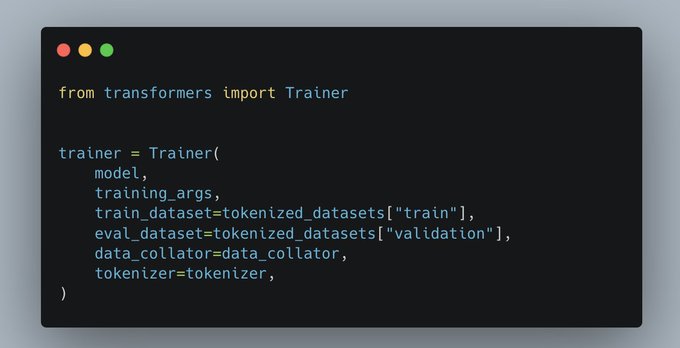

@nvidia

@DBahdanau

The only thing left to check was the rest of the training script. We found a bug in the weight decay! However, it didn’t seem to impact the training in the short run. We also used 🤗 Trainer to replace our training loop in case there was another bug, but there wasn’t. (8/n)

2

1

33

@Grady_Booch

We also do have a lot of incorrect, wrong & inappropriate information on the web, that is used to train today's LLMs. At least with synthetic data you can have some control over what you generate. Hallucination remains an issue for sure, there are methods to attenuate it like

3

0

33

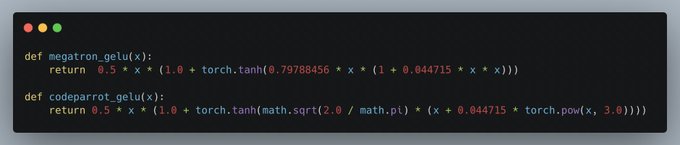

@nvidia

@DBahdanau

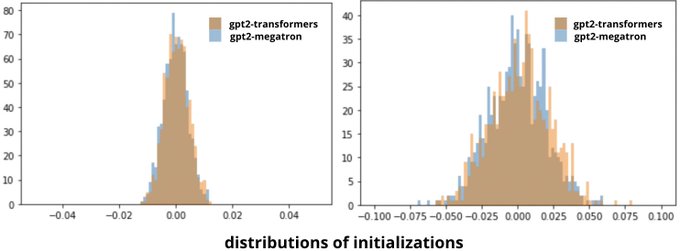

Naturally, we compared the architectures of GPT2 in both frameworks to look for any differences. The only one we found was in the GELU activation function: the two frameworks use slightly different implementations, but this didn’t impact the training. (5/n)
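The thread does not say which GELU variants were involved. A common source of such a difference is the exact, erf-based definition versus the tanh approximation, so the sketch below compares those two as an assumption.

```python
import math


def gelu_exact(x):
    """GELU defined through the Gaussian CDF: x * Phi(x)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    """Widely used tanh approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Largest gap between the two variants on a grid over [-5, 5].
grid = [i / 100 for i in range(-500, 501)]
max_gap = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in grid)
print(f"max |exact - tanh| on the grid: {max_gap:.2e}")
```

The gap is small but nonzero, which fits the observation that the difference was real but did not change the training.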

1

0

32

@nvidia

@DBahdanau

But we got excited too early: the authors of this change explained to us that it doesn't impact the training in the long run. And indeed, going beyond 2000 steps makes the gap go away. (10/n)

1

1

32

Megatron is a framework developed by

@Nvidia

for training large transformer models.

@DBahdanau

observed a training gap for CodeParrot, a GPT2 model for code generation, between Megatron and our script in transformers. (2/n)

2

0

32

@nvidia

@DBahdanau

Another candidate for the difference was the optimizer: we used AdamW from transformers, while Megatron uses AdamW from Apex. We trained the model with the latter but it didn’t seem to solve the problem 😓 (6/n)

1

0

32

@nvidia

@DBahdanau

Then we suspected that the initialization weights could be different between the two frameworks, but it turned out they followed the same distributions. (4/n)

1

1

31

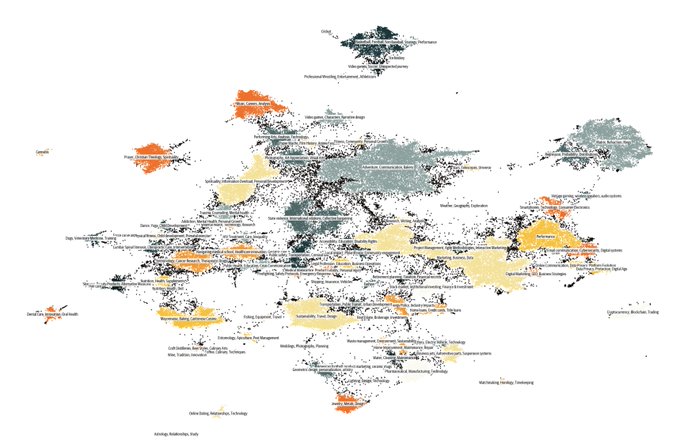

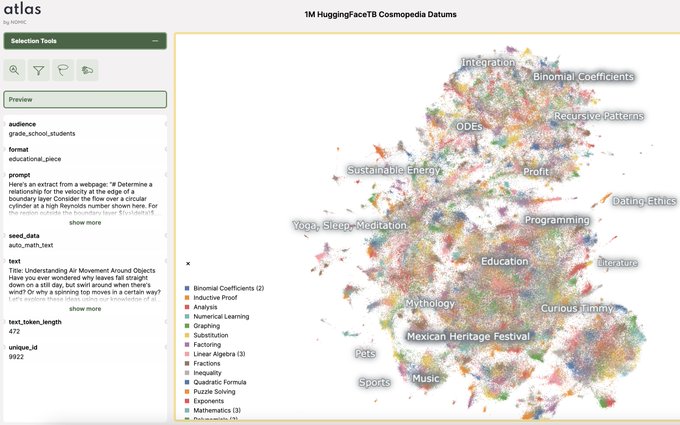

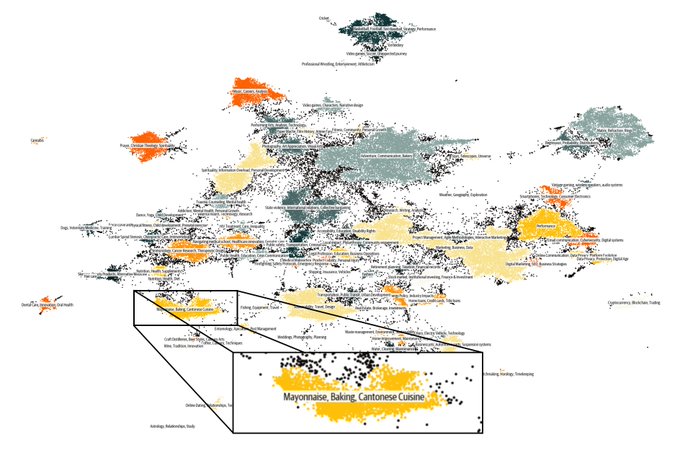

Here's a nice tool for visualizing and clustering your dataset into topics 🔍

We used it to understand topic coverage and filter web samples when building Cosmopedia:

1

1

28

If you contributed code to GitHub and permissively licensed it, you might help build the next generation of code language models 🚀

0

4

29

Join us tomorrow to learn more about StarCoder 💫

Can't wait to hear about StarCoder from

@LoubnaBenAllal1

! ICYMI, StarCoder is a code LLM from Hugging Face with 15B params & 8k context, trained on 1T tokens of permissive data in 80+ programming languages.

Starts in 24 hrs: May 16, 9am PST. RSVP below.

2

6

27

0

4

26

🇲🇦 Proud to see this platform we developed with fellow Moroccans help in earthquake relief efforts. To contribute please visit:

We have a map for coordination + forms and a WhatsApp bot for victims, witnesses & NGOs:

Our very own

@Nouamanetazi

was on Moroccan national television

@2MInteractive

to talk about the

@huggingface

Space he and

@LoubnaBenAllal1

created to aid in

#MoroccoEarthquake

relief efforts🙏

Link to Space:

1

4

31

0

5

27

@nvidia

@DBahdanau

We were quite desperate already but we hoped some of these changes would exhibit a difference in a longer training 🤞 (12/n)

1

0

26

Check our blog for details on the new Code Llama family:

We also updated the Code Leaderboard to integrate all 9 models:

Code Llama with

@huggingface

🤗 Yesterday,

@MetaAI

released Code Llama, a family of open-access code LLMs!

Today, we release the integration in the Hugging Face ecosystem🔥

Models:

👉

blog post:

👉

Blog post covers how to use it!

7

80

297

1

2

23

Next Tuesday, I will give a webinar hosted by

@AnalyticsVidhya

on the training of LLMs for code, like StarCoder.

I will also discuss how to leverage these models using open-source libraries such as transformers, datasets and PEFT.

Register here: .

1

3

23

Honored to have given a talk at

@KTHuniversity

about Machine Learning for Code at

@Huggingface

with CodeParrot & BigCode.

🦜 For educational tools about code models:

🌸 For some state-of-the-art code datasets and models:

1

6

23

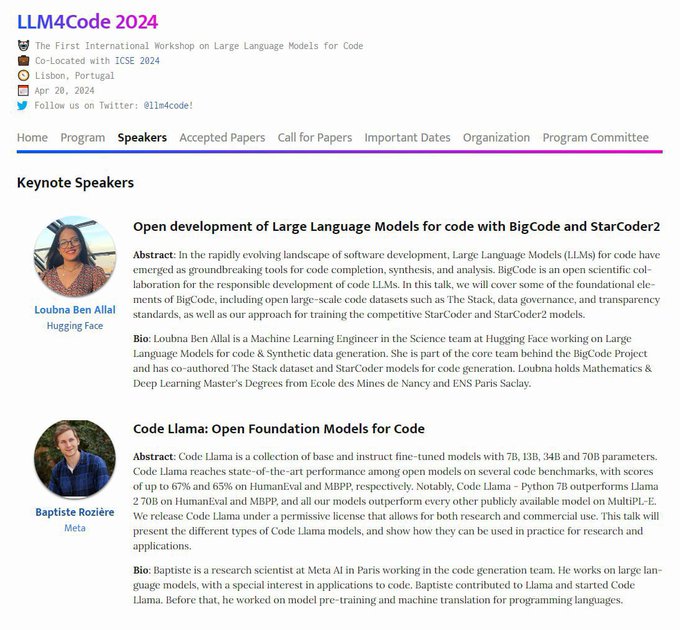

Happy to be speaking at

@llm4code

workshop!

Very excited to share the 1st

@llm4code

workshop has attracted 170+ registrations!

If you’re at

@ICSEconf

and interested in LLMs, pls join us on April 20, we have 24 presentations and two keynotes on Code Llama (

@b_roziere

) and StarCoder2 (

@LoubnaBenAllal1

)!

#icse24

#llm4code

1

5

44

2

2

20

@linoy_tsaban

and I already got our 🎃 Halloween photo at: try it out!

Introducing 🎃🦇 the AI Halloween Photobooth! 🦇🎃

Turn into a Spooky Skeleton💀✨ or a PS1 style vampire 🎮🧛

From

@linoy_tsaban

and I, powered by LEDITS 🎨: spooky iteration of what we had

@ICCVConference

/

@huggingface

Paris event🕸️🕷

Go play! ▶️

4

14

61

0

5

19

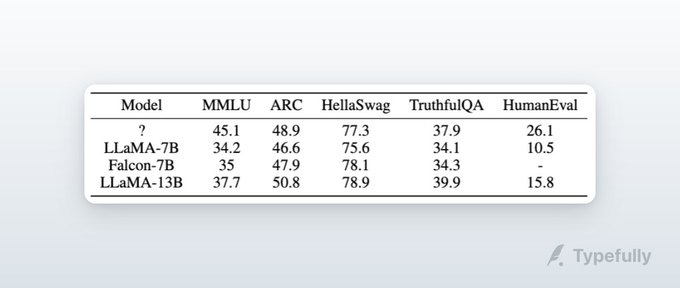

@Thom_Wolf

@radAILabs

@Dr_Almazrouei

@TIIuae

@huggingface

It has 35.37% pass@1 on HumanEval. You can also compare it to code models on 10+ programming languages in the Big Code Models Leaderboard:

2

4

16

Happy to see our models and datasets getting adopted by the community 🚀

1

1

18

Thank you for the invitation, it was a pleasure to give this webinar 🤗

Thrilled to announce the success of our recent

#webinar

on Generative Models with Loubna Ben Allal.

If you missed it, watch the recording here:

Take our survey to improve future events, & stay tuned for more!

#MoroccoAI

#AI

#NLP

0

3

18

1

1

18

Happy to see The Stack released 🚀

For more information about the dataset and the models we trained, check out this paper

1

2

17

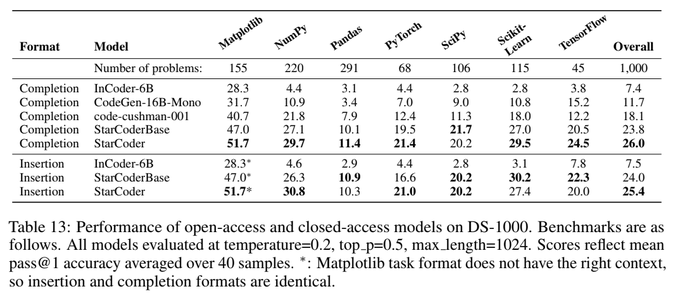

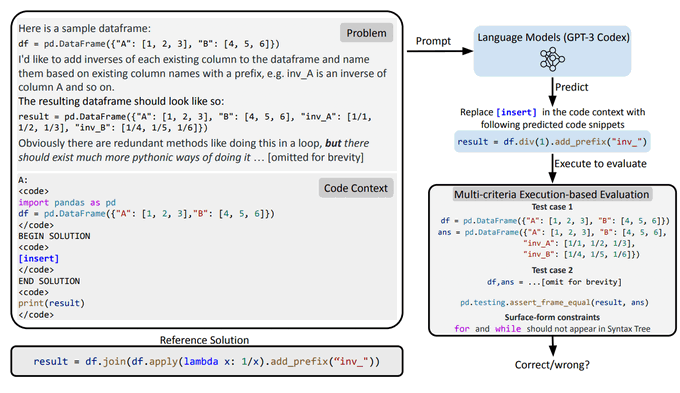

DS-1000 is a realistic Python benchmark of Data Science use cases based on Stack-Overflow questions. It consists of 1000 problems spanning 7 widely-used libraries, and it was developed by

@HKUniversity

NLP Group.

1

3

16

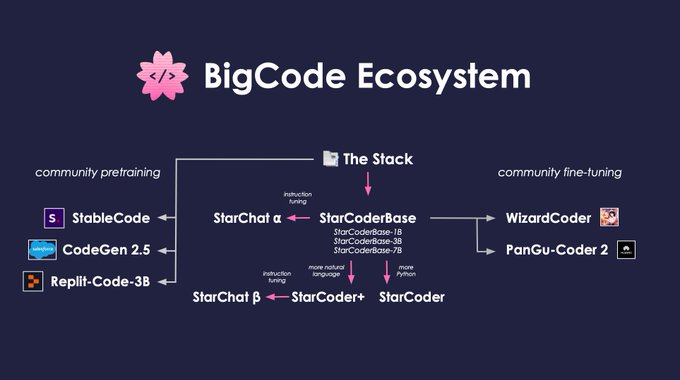

Check our latest StarCoder descendants: StarCoder+ and StarChat Beta, a strong chat assistant with high coding capabilities 🚀

1

3

15

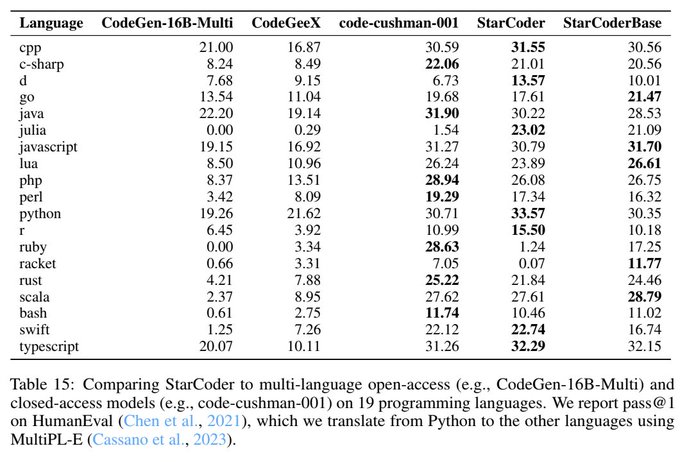

MultiPL-E is the translation of HumanEval to 18 programming languages by

@northeasterm

Programming Research Lab.

It powers the Multilingual Code Evaluation leaderboard

1

2

14

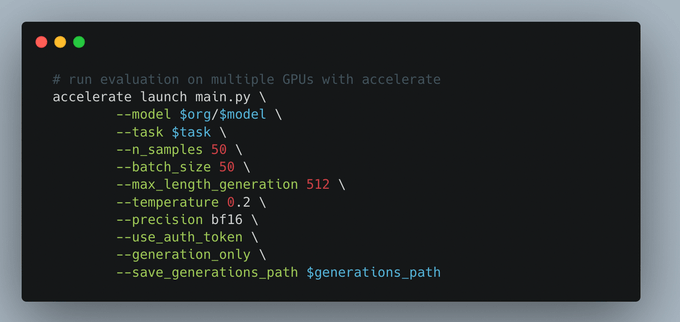

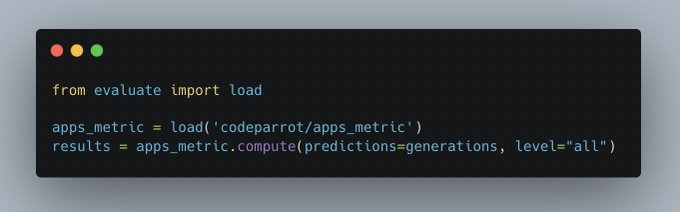

In BigCode we developed a code evaluation harness to facilitate this task:

@MosaicML

is developing an end-to-end solution for code evaluation:

1

2

13

@d_aumiller

Indeed, it can be expensive, which is why we trained for a few steps to test the changes (but this can also lead to false conclusions). I think it’s important to split the problem into small pieces and keep track of everything that was tested and the order of the tests

0

0

13

Join us on the BigCode journey 🚀 and contribute to the next language model for code.

Together we will address the challenges of this field in an open and responsible way 🌸.

print("Hello world! 🎉")

Excited to announce the BigCode project led by

@ServiceNowRSRCH

and

@huggingface

! In the spirit of BigScience we aim to develop large language models for code in an open and responsible way.

Join here:

A thread with our goals🧵

5

74

215

1

1

13

@Jakewk

It's textbooks generated by an LLM. There's some hallucination for sure, but the performance of the model we trained on the dataset suggests a large chunk is accurate. You can inspect some samples here:

3

1

12

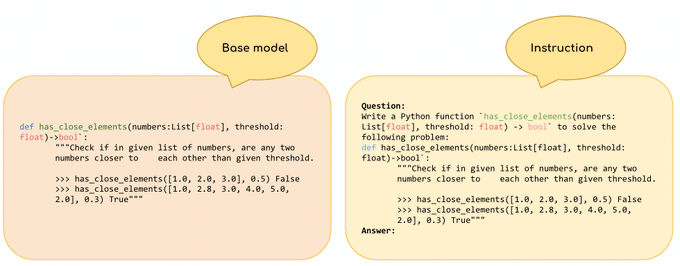

That's where functional correctness shines: we test model generations against unit tests, like humans would, and we report a score called pass@k.

➡️ HumanEval = 164 Python programs with 7.7 tests per problem on average.
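For reference, here is a short sketch of the unbiased pass@k estimator introduced with HumanEval. For each problem, n samples are generated, c of them pass the unit tests, and pass@k = 1 - C(n-c, k) / C(n, k), averaged over problems. The function names `pass_at_k` and `mean_pass_at_k` are chosen for this example.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem: n samples, c of them pass."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k):
    """results is a list of (n, c) pairs, one per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With k=1 the estimate reduces to the fraction of passing samples, c / n. The benchmark score is the mean over all 164 problems.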

1

1

11

The instruction-tuned version of StarCoder2 is out by the HuggingFaceH4 team 🚀

0

1

9

@GrantDeLozier

@Thom_Wolf

It was actually a bug in the weight decay, not the LR: because of a typo, it was also applied to LayerNorms, which are normally excluded. And yes, we do plot the learning rate curves; it’s good practice to follow how it changes
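The usual way to exclude LayerNorm (and bias) parameters from weight decay is to split parameters into two groups by name. The sketch below is not the CodeParrot training script: the marker strings and the GPT-2-style parameter names are assumptions for illustration. A typo in one marker is enough to send LayerNorm weights into the decayed group without raising any error.

```python
def split_for_weight_decay(param_names, no_decay_markers=("bias", "layernorm", "ln_")):
    """Split parameter names into groups that do and do not receive weight decay."""
    decay, no_decay = [], []
    for name in param_names:
        if any(marker in name.lower() for marker in no_decay_markers):
            no_decay.append(name)
        else:
            decay.append(name)
    return decay, no_decay


# Hypothetical GPT-2-style parameter names, for illustration only.
names = [
    "h.0.attn.c_attn.weight",
    "h.0.attn.c_attn.bias",
    "h.0.ln_1.weight",
    "h.0.mlp.c_fc.weight",
    "ln_f.weight",
]
decay, no_decay = split_for_weight_decay(names)
```

In a PyTorch training script the two groups would then be passed to the optimizer as separate parameter groups, with `weight_decay` set to 0.0 for the second one.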

1

1

9

Check starcoder.cpp by

@Nouamanetazi

to run StarCoder at lightning-fast speed! ⚡

1

2

9

@thukeg

Great work! We added HumanEval-X to the Hugging Face hub and we can transfer it to your HF organization . It would be great to have the models there too!

3

1

10

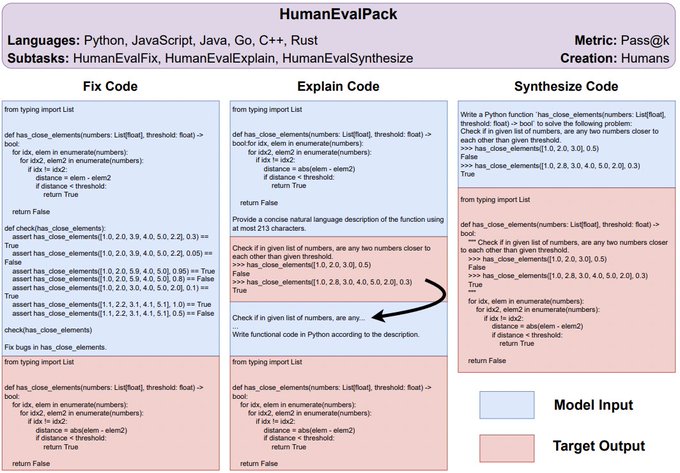

There are also other benchmarks for testing tasks like Program Repair and Code Explanation within HumanEvalPack thanks to

@Muennighoff

& team's work in

@BigCodeProject

.

1

3

9